This Month’s Reports by TechLetter: January 2026

AI, Work, and the New Geography of Power

January feels like the month the AI narrative finally caught up with reality. We can stop pretending we are “early experimenting.” We are now in the phase where public services are being redesigned around AI, governance has turned into a vendor market, and Chinese models quietly dominate the places Western tools cannot reach. The reports below are not about what might happen one day. They read like field notes from systems that are already running.

This is the first TechLetter “This Month’s Reports” of 2026, a series where I round up the papers and briefings that actually move the AI governance, business, and geopolitics conversation forward.

1. Microsoft AI Economy Institute — Global AI Adoption in 2025: A Widening Digital Divide

Microsoft’s AI diffusion report is the piece that quietly reframes the whole month. Instead of asking who has the best model, it asks who is actually using AI, where, and how fast that is changing. The answers are not what most headlines suggest.

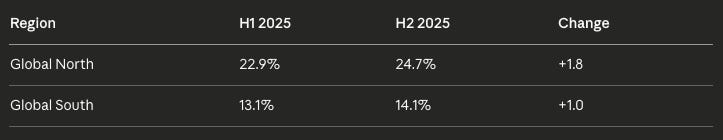

Global AI adoption reached 16.3% of the world’s population by the end of 2025, up from 15.1% in H1. Roughly one in six people now uses generative AI. That is remarkable progress for a technology that barely existed three years ago.

But the more important story is the gap. The distance between the Global North and Global South widened from 9.8 to 10.6 percentage points in just six months, with wealthy countries’ usage growing nearly twice as fast.

Looking from Türkiye, the appendix is a quiet reality check. AI diffusion here reached 14.6% in H2 2025, up from 13.4%. That puts us slightly above the Global South average but well below the global mean, behind neighbours like Jordan (27%) and Lebanon (25.7%). Our 1.2‑point growth rate matches the global average, which means we are neither catching up nor falling further behind.
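For readers who want to check the arithmetic behind "neither catching up nor falling further behind", here is a minimal sketch using only the figures quoted above. The helper names are my own and purely illustrative; nothing here comes from the report's methodology.

```python
# Figures are the ones quoted in the text above; helper names are illustrative.

def point_change(before: float, after: float) -> float:
    """Absolute change in percentage points, rounded to one decimal."""
    return round(after - before, 1)


def relative_change(before: float, after: float) -> float:
    """Change relative to the starting share, in percent."""
    return round((after - before) / before * 100, 1)


# Share of population using AI: (H1 2025, H2 2025)
adoption = {
    "Global": (15.1, 16.3),
    "Türkiye": (13.4, 14.6),
}

for name, (h1, h2) in adoption.items():
    print(f"{name}: +{point_change(h1, h2)} points, "
          f"+{relative_change(h1, h2)}% relative to H1")

# Global North vs Global South gap, in percentage points (H1, H2)
north_south_gap = (9.8, 10.6)
print(f"North-South gap: +{point_change(*north_south_gap)} points")
```

Both series gain the same 1.2 points, so the distance between Türkiye and the global figure stays at 1.7 points across the two halves of the year. Relative growth is slightly higher for Türkiye only because it starts from a smaller base.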

Top 5: UAE (64%), Singapore (60.9%), Norway (46.4%), Ireland (44.6%), France (44%)

The US paradox: Despite leading in AI infrastructure and frontier model development, the US ranks just 24th (28.3% adoption), actually falling from 23rd. The country that builds the most advanced AI has a smaller share of its population using it than Belgium or Taiwan.

The report is blunt: “Leadership in innovation and infrastructure, while critical, does not by themselves lead to broad AI adoption.”

South Korea’s surge: The biggest mover, jumping seven spots from 25th to 18th. Usage grew from 26% to over 30% in six months. Total growth since October 2024 is 81%, far outpacing both the global average (35%) and the US (25%). It is now the world’s second-largest ChatGPT subscriber market.

What really opens a new angle is the DeepSeek section. Microsoft treats DeepSeek not as a curiosity, but as a structural factor in the diffusion map. A free chatbot plus an MIT‑licensed model has turned it into the default in parts of China, Russia, Iran, Cuba, Belarus and, increasingly, across Africa, helped by distribution partnerships with players like Huawei.

The report is about global adoption, but the subtext is ecosystem competition: a US‑centric stack versus a China‑centric stack racing to become the everyday default in emerging markets.

Why is it important?

This report quietly kills the idea that “frontier leadership” is enough. If you are in Washington, Brussels or Ankara still designing AI policy around model capability and chips, this is your wake‑up call that diffusion, defaults and distribution are now the real levers of power.

2. Davos 2026: From Potential to Performance

The WEF “From Potential to Performance” report collects real cases of AI delivering measurable performance gains across more than 30 countries and 20 industries. The pattern is almost boring in how consistent it is: the organisations that make AI work embed it into strategy, redesign work around human–AI collaboration, and fix their data foundations instead of throwing one more tool at the problem.

Their jobs and skills report adds four scenarios for 2030, from a “supercharged progress” world where productivity booms but governance lags, through an “age of displacement” where tech outruns reskilling, to a quieter “co‑pilot economy” of gradual transformation. It reads less like a prediction and more like a stress‑testing kit for workforce plans.

Why is it important?

If you read my TechLetter piece “Davos 2026 AI Recap: From Pilots to Infrastructure,” you will recognise the same undercurrent. The interesting thing in Davos this year was not that everyone talked about AI. It was that the WEF reports normalise something many leaders still treat as failure: the fact that AI only works when you re‑architect work and data. If your organisation is still trying to “slot AI in” without touching workflows or incentives, you are not behind schedule; you are on the wrong project plan.

3. Public-Service Reform in the Age of AI

Public services are not just tired, they are structurally out of gas. That is the starting point of Public‑Service Reform in the Age of AI, and it is more honest than most government‑adjacent writing. The authors’ core move is to stop treating AI as a bolt‑on “innovation project” and instead pitch it as the lever to rebuild the operating model of the state itself: always‑on, personalised, preventative services running on digital ID, shared platforms, and a real‑time intelligence layer.

What really caught my eye is how directly they say “managed decline” has become the default, even with record spending. Unless governments replace the labour‑intensive, reactive model, more money just buys longer queues and more burnout, not better outcomes.

Their alternative is a very Blair-era move updated for 2026. AI as a multiplier for human judgement. The NHS app as a core health platform. Digital ID as the connective tissue. Accountability shifting from retrospective inspections to live dashboards. Govern by signal and steer.

Why is it important?

This report is less about technology and more about political will. The argument is that governments have not had a coherent theory of public‑service reform since New Labour, and AI gives them a chance to build one. The framing matters: AI is not just a tech upgrade, it is a chance to redefine what the state actually does. If you work in government and still treat AI as a pilot on the side, this paper is politely telling you that you are part of the problem.

4. KDnuggets — Emerging Trends in AI Ethics and Governance for 2026

This one is not a formal report; read it as a pulse check on where AI ethics people actually are in 2026. The central line is brutal and accurate: you cannot run yearly policy cycles on systems that ship new versions every week, especially when the CFO suddenly decides to “just automate” bookkeeping.

The piece walks through what adaptive governance looks like in practice: guardrails wired into CI/CD pipelines, automated monitoring flagging ethical drift, living policies that get updated alongside model versions, and sandboxes that behave more like parallel production than safe playgrounds. It also nails a point most enterprises still ignore: AI supply‑chain audits now have to cover pretrained models, third‑party APIs, labelling vendors, and upstream datasets, because your system is only as ethical as the weakest link in that chain.
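To make "guardrails wired into CI/CD pipelines" less abstract, here is a minimal sketch of what a release gate could look like. Everything in it is an assumption for illustration: the metric names, the thresholds, and the `Guardrail` and `failed_guardrails` helpers are hypothetical and do not come from the KDnuggets piece or any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrail:
    """One rule from a 'living policy' (hypothetical structure)."""
    metric: str              # name of an evaluation metric
    limit: float             # threshold agreed in the policy
    higher_is_better: bool   # True: value must be >= limit; False: <= limit


def failed_guardrails(metrics: dict[str, float],
                      guardrails: list[Guardrail]) -> list[str]:
    """Return a human-readable entry for every rule the candidate breaks."""
    failures = []
    for rule in guardrails:
        value = metrics.get(rule.metric)
        if value is None:
            # A metric that was never measured counts as a failure.
            failures.append(f"{rule.metric}: not reported")
            continue
        passed = value >= rule.limit if rule.higher_is_better else value <= rule.limit
        if not passed:
            bound = "min" if rule.higher_is_better else "max"
            failures.append(f"{rule.metric}: {value} ({bound} {rule.limit})")
    return failures


# Illustrative policy and evaluation results for one candidate model version.
POLICY = [
    Guardrail("toxicity_rate", 0.01, higher_is_better=False),
    Guardrail("demographic_parity_gap", 0.05, higher_is_better=False),
    Guardrail("accuracy", 0.90, higher_is_better=True),
]

candidate = {"toxicity_rate": 0.004, "demographic_parity_gap": 0.08, "accuracy": 0.93}

problems = failed_guardrails(candidate, POLICY)
print("BLOCK RELEASE" if problems else "OK TO SHIP", problems)
```

In a real pipeline the build step would exit with a non-zero status whenever the list is non-empty, and the policy would live in version control next to the model so both change together. The design choice worth noting is that a missing metric blocks the release, which keeps "we did not measure it" from passing as "it is fine".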

A note for readers in Türkiye: I’m running AI trainings with Lokomotif AI, covering everything from ethics to governance and adoption. As an AI governance consultant for global companies, I see every day how critical this topic is, and how far many Turkish organisations still have to go. If you’d like the syllabus or a tailor‑made programme for your teams, feel free to reach out.

Two ideas stand out for enterprise teams. First, regulatory sandboxes are evolving from small, fenced pilots into near‑production testing environments that mirror real data, user behaviour, and adversarial conditions. Second, AI supply‑chain mapping is getting serious: leaders want to know where training data came from, how upstream vendors manage risk, and where hidden vulnerabilities enter through third‑party components.

Why is it important?

The piece exposes how fragile most current “responsible AI” decks are. If your ethics framework cannot survive weekly model releases and a changing vendor stack, it is not a framework, it is a slide you show to your board to sleep at night.

If KDnuggets is the trend radar, my TechLetter piece AI Ethics in Action is the field manual. Read together, they make one argument: AI ethics in 2026 is no longer about who has the best principle slide. It is about who can build systems that adapt, log, and course‑correct in real time, while people on the ground still understand who is responsible for what.

5. PwC — 2026 AI Business Predictions

PwC is basically writing the boardroom script for AI in 2026. The predictions lean on a simple idea: “doing AI” is no longer interesting; turning AI into durable business performance is. They focus on pressure points executives actually feel: embedding AI in core strategy, redesigning work around human–AI collaboration instead of just chasing headcount cuts, hardening data foundations, and getting ahead of regulation rather than treating it as an afterthought.

Read together with TechRepublic’s AI Adoption Trends in the Enterprise 2026, you get two layers of the same story. PwC gives the high‑level narrative about where value should come from. TechRepublic shows why so many organisations are still stuck in the messy middle with half‑baked pilots, weak data pipelines, and governance that cannot keep up with weekly model changes. I think of them as the “board slide” and the “reality slide” of enterprise AI: impressive ambitions on top, a lot of unresolved plumbing and responsibility questions underneath.

Why is it important?

These predictions will quietly reset executive expectations. If you are a CDO, CIO or head of AI, this is the bar your board is now reading over coffee. Either you shape that agenda with a realistic roadmap, or external advisors will happily do it for you.

6. AI Adoption Trends in the Enterprise 2026

This one is a good reality check on where enterprises actually are with AI, not where slide decks claim they are. The headline is simple: AI is everywhere in the enterprise, but value is not guaranteed, so CIOs are clustering around seven concrete trends to scale what already exists, modernise data, close skill gaps, and manage the new risk surface.

Under the buzzwords you see a clear pattern. Leaders are shifting focus from “which model” to “which system,” leaning into orchestration, workflow integration, and governance that can cope with weekly model changes.

Why is it important?

This is one of the first mainstream pieces to admit that “AI adoption” metrics are now meaningless on their own. If you report success by counting tools or users instead of business outcomes and risk posture, you are optimising the wrong KPI.

7. US Labor Market Trends

The January chartbook is not an AI report on the surface, but it is an important backdrop for any AI‑and‑work discussion. You see sector‑by‑sector demand shifts, wage dynamics, and the early signs of where automation pressure and labour shortages are going to collide.

Put this next to the Davos conversations on jobs and the WEF scenarios for the future of work and a pattern emerges: leaders are publicly nervous about displacement, while labour markets are still hot in specific skill bands. That gap is exactly where AI will land, either as a bridge or as a wedge.

Why is it important?

These charts make the AI jobs debate less abstract. If you are a company building AI tools, this is basically a map of where you are about to be blamed: the exact occupations and regions where automation and shortages will collide first. In my Davos piece, I called out the “ladder problem” for junior roles and tacit knowledge transfer. These charts help you see where that problem is likely to bite first.

January is about admitting AI has taken over the plumbing. The interesting action is no longer in model demos, it is in adoption curves, labour charts, procurement decisions, and the quiet choices regulators and boards make about who gets to be the default.

If you’re still treating AI as a side project, these reports are a gentle but firm reminder: the systems are already running. The only real question is whether you want a say in how they are wired.

💬 Let’s Connect:

🔗 LinkedIn: [linkedin.com/in/nesibe-kiris]

🐦 Twitter/X: [@nesibekiris]

📸 Instagram: [@nesibekiris]

🔔 New here? Subscribe for weekly updates on AI governance, ethics, and policy. No hype, just what matters.