The Promise of GPT-4o’s Image Generator

New ethical questions to ask

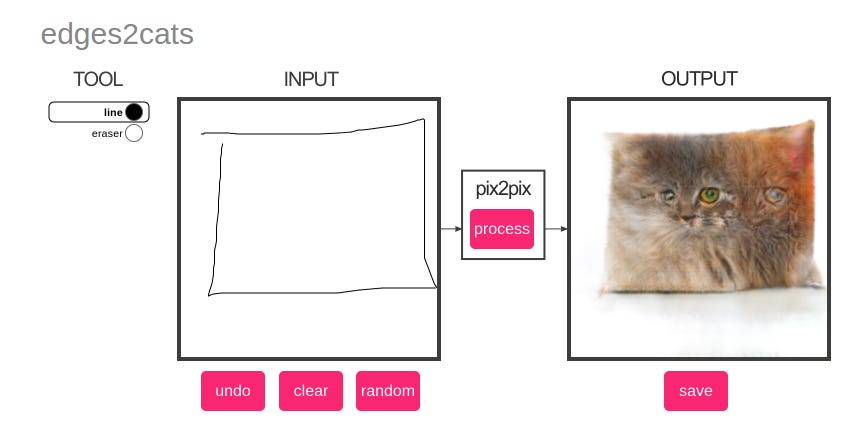

Not long ago, we marveled at AI models that could draw a cat — sort of. The paws were weird, the text unreadable, and any attempt at realism made you appreciate the human hand even more.

For me, the shift started with a face.

I typed a simple prompt: “a woman speaking at a tech summit, surrounded by geometric light.”

Within a minute, there she was—composed, expressive, rendered in photo-realistic detail. A version of someone who might be me. Or you. Or no one.

That’s when it hit me: the machine doesn’t just draw. It sees—or at least pretends to. It doesn’t just visualize the world—it mirrors the patterns we’ve fed it, silently learning what counts as authoritative, beautiful, or believable. And in that pretense, it absorbs a world of expectations, permissions, and omissions. Which skin tones are “default”? Which facial structures become “professional”? What does it mean when AI begins not just to generate, but to interpret and replicate visual norms?

OpenAI’s new GPT-4o image generation capability is not just a technical development, but also a governance experiment unfolding in real time—a mirror that doesn’t just reflect us, but reinterprets us based on what it’s allowed to see.

So what, exactly, is this new capability?

GPT-4o isn't just a smarter version of DALL·E. It's part of a broader shift toward omnimodal systems: models that work across several modalities at once. GPT-4o accepts any combination of text, audio, image, and video as input and can produce text, audio, and images as output. This new image capability doesn't just enhance aesthetics; it sharpens the model's ability to "understand" multimodal inputs and act on them with precision.

It introduces:

Photo-realism and control: GPT-4o renders legible text and can handle up to 20 distinct objects in one scene while correctly binding attributes (such as color or position) to each one. Designers can specify styles, colors (via hex codes), aspect ratios, or transparent backgrounds.

Multimodal integration: Users can edit, restyle, or reimagine visuals from prompts or uploaded images—blending creative art, product mockups, and concept development into one chat window.

Metadata transparency: All generated images are tagged with C2PA metadata, enabling future provenance checks (though visual watermarks are absent).

The creative leap is obvious. But as the visuals improve, governance becomes less visible: less about what users are doing, and more about how models interpret intent.

The Ethical Boundaries Are There—But Are They Enough?

OpenAI deserves credit for its proactive restrictions: blocking violent or hateful content, steering clear of named public figures, and investing in provenance tools. It has also worked with red teams and ethicists to reduce bias in image outputs. These are steps in the right direction.

Yet the road ahead is riddled with dilemmas that filters and disclaimers alone can’t fix.

A quiet but profound shift underpins this release: OpenAI has restructured its image content moderation approach. What was once a firm “no” to public figures, political symbols, and sensitive visuals is now… a conditional yes.

Let’s break that down:

Public figures like Trump can now be rendered—as long as the context is “neutral or educational.” (What counts as neutral in 2025? This is a subjective line that will be tested often.)

Hateful symbols are allowed in educational settings—but banned if interpreted as glorification.

Body types and racial features can be adjusted—acknowledging that previous refusals were clumsy, sometimes discriminatory.

Living artists’ styles are still protected, but broader studio aesthetics—like “Studio Ghibli” or “Pixar-style”—are fair game.

Opt-out mechanisms exist for public figures who don’t want their likeness used.

OpenAI frames these moves as “reducing refusals” and increasing “user creative autonomy.” But autonomy isn’t neutral. It’s built on defaults that remain largely unexamined—and shaped by a model’s probabilistic interpretation of appropriateness.

And policy refinements—no matter how well-intentioned—don’t erase the cultural power of visual defaults.

Even where OpenAI refuses to generate a public figure by name, users can often circumvent the restriction with clever phrasing. Visual mimicry doesn't need labels; it just needs cues.

And behind every AI-generated face are a million decisions the user didn't make, but the model did.

Where the Ethical Cracks Show

Even with these new rules, cracks are visible. Not in the rendering, but in the reasoning.

🔍 Deepfake Risks

Despite content filters, GPT-4o's image quality makes malicious misuse easier. OpenAI applies stricter rules to sexual deepfakes and child abuse material. But for political satire, misinformation, or coordinated disinformation campaigns? The boundaries blur fast, and bad actors don't need perfect replicas. They just need plausible ones.

🎨 Artist Rights and Style Theft

Blocking direct prompts like "in the style of Yayoi Kusama" is good policy. But if the model can still generate Kusama-like dot motifs via indirect cues, have we really protected anything? There's no registry, no consent mechanism, no shared benefit model. Artists remain invisible contributors to a system that learns from them.

📉 Transparency Without Trust

Yes, C2PA metadata exists. But how many users know how to check it, or care? Without visual watermarks or widely adopted provenance tools, detection stays academic. In a world dominated by screenshots and social shares, metadata often gets lost before it matters: a screenshot carries none of it, and many platforms strip embedded metadata on upload.

🎭 Political Timing and Platform Pressure

The policy updates didn’t emerge in a vacuum. They coincided with U.S. political backlash about perceived bias in AI models. OpenAI says this shift is about technical maturity. But critics see it as platform appeasement. Whether that’s true or not, the perception alone erodes credibility.

What Gets Lost When Everything Is Easily Generated?

There’s another risk that doesn’t get talked about enough: the quiet erosion of creative labor.

When visuals are cheap, instant, and customizable, what becomes of the illustrators, storyboard artists, or brand designers who once made a living from their distinct visual voices? If we increasingly turn to AI for “good enough” art, are we cultivating a visual culture of convenience at the expense of originality?

Some will argue that AI democratizes creativity. And it does—to a point. But true creativity isn’t just about making something new. It’s about making something meaningful. And that still takes time, context, and the human ability to wrestle with ambiguity. When we offload too much of that process, we risk flattening creativity into mere visual output.

A New Model of Moderation — Or an Algorithmic Escape Hatch?

GPT-4o is being trained not just to generate—but to moderate itself. That’s a major shift.

Using a reasoning LLM, OpenAI now feeds policy definitions directly into the model to evaluate content generation against human-written rules. This enables:

Faster rollout of policy updates (hours, not months)

“Chain-of-thought” moderation (models assessing their own decisions)

Fewer humans involved in the loop—especially for routine moderation tasks

This is governance-by-model. Efficient, yes. Scalable, maybe. But democratic? Transparent? Auditable? Those questions remain unanswered.

Moderation is no longer just about what users ask for. It’s about what the model thinks the user meant—and what the model believes is acceptable. That subtle shift from user intent to model judgment is where so much ethical complexity now lives.

What This Means for AI Governance

In many ways, GPT-4o’s image generator is a stress test for responsible innovation. It reveals the difficulty of building policies that can flex across languages, cultures, creative intentions, and misuse risks. But it also reveals the limits of internal alignment.

OpenAI’s framework—while thoughtful—is still company-defined, model-enforced, and politically reactive. We’re witnessing the emergence of a visual culture shaped by machines whose ethical lens is built from probability, not philosophy.

This isn’t just about images. It’s about power.

Who gets to define beauty? Realism? Representation? Consent?

When AI can draw anything, the boundaries we set around what it should draw matter more than ever.

Nesibe’s Take

Here’s what’s sticking with me: This isn’t just a technical release. It’s a cultural intervention.

OpenAI has trained a model to see, imagine, and produce, based on what it has absorbed from a world that is already unequal, biased, and visually coded in ways we rarely pause to question. And now, that model is helping hundreds of millions of people shape their own visual outputs, faster than ever.

There’s brilliance in that. But also risk. Not the kind you can patch with metadata or opt-outs, but the kind that demands real cultural reckoning.

We need more than smarter safeguards. We need slower thinking. External audits. Creative rights frameworks. And more space for communities—not just companies—to shape how this technology evolves.

Let’s not just marvel at what AI can generate. Let’s ask what it’s learning to forget—and who pays the price when it does.