Hey everyone! A Sunday bite for you :) As the importance of AI continues to grow, we’re seeing more and more global reports being released. Today, I’m excited to dive into the OECD's latest report, published just four days ago, titled "AI, Data Governance, and Privacy: Synergies and Areas of International Co-operation." This report takes a close look at the intersection of artificial intelligence (AI) and privacy, exploring how these two critical areas can be better aligned.

Key Sections of the Report

Opportunities and Risks of Generative AI

Mapping AI and Privacy Principles

National and Regional Developments

Policy Recommendations and Future Steps

Taking a byte out of the latest AI and data privacy updates – because who said tech can't be tasty?

Before diving into the article, if you enjoy my work, please subscribe and share! Because sharing is caring, especially in the world of tech bytes!

Why It Matters

One of the biggest concerns about AI is privacy, and the lack of established principles and regulatory frameworks only amplifies these worries. When public authorities adopt these technologies and ensure they are reliable, the public becomes more willing to use them. AI has the potential to make public administration more efficient, transparent, and accountable. However, we must not overlook the risks to data privacy and security, which is why the ethical and responsible use of AI is paramount. When we talk about data and advanced technologies, focusing solely on local steps and projects won’t cut it: international cooperation on aligning data protection and privacy policies with AI is crucial.

Mapping AI and Privacy Principles

First, let’s dive into the foundational section of the report: mapping AI and privacy principles, highlighting the synergies and collaboration opportunities between AI and privacy policies.

OECD AI Principles and Privacy Guidelines

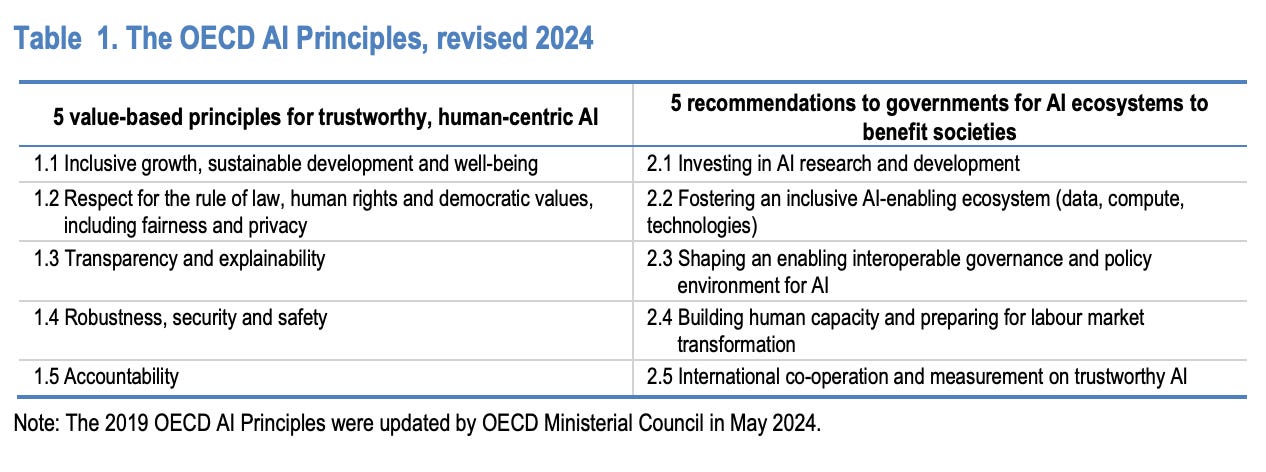

Following the 2018 Universal Guidelines for AI, one of the first major documents in this space was the 2019 OECD AI Principles, which were updated just last month.

The OECD AI Principles promote innovative and reliable AI usage while emphasizing respect for human rights and democratic values. These principles serve as a global guide for AI policies and legal frameworks. However, they can be a bit theoretical. We shouldn’t dismiss these developments, but relying solely on principles isn’t enough.

The OECD Privacy Guidelines, adopted in 1980 and updated in 2013, form the foundation of data protection laws around the world. They aim to protect individuals' rights when their data are processed and provide a global framework for the fair and lawful collection of data. They also offer a case study of how the OECD's guidelines are applied in practice today.

Policy Issues and Common Areas

This work aims to identify common policy areas and collaboration opportunities between AI and privacy communities. Reaching the ideal is impossible without these stakeholders working together. That’s why this report is so important to me.

Different Areas:

As for the main differences identified in the report, diverging priorities among stakeholders lead to conflicts in certain areas.

(I will dive into these principles in detail later in the newsletter. For those who want to get straight to the point, I provided a summary first. Feel free to explore further if you wish.)

National and Regional Developments in AI and Privacy

Developments in AI and privacy at the national and regional levels are multiplying rapidly worldwide. This section of the report provides an overview, as of early 2024, of notable initiatives at the intersection of AI, data protection, and privacy, enriched with contributions from OECD member and partner countries. Given the fast pace of change, the list is not exhaustive. The analysis highlights the diversity and complementarity of the measures adopted by various authorities, ranging from positive incentives to more coercive actions. By the way, much of this information has also been covered in this newsletter! :)

Responses from International Privacy Enforcement Authorities

Privacy Enforcement Authorities (PEAs) are working together on various declarations and decisions regarding AI, especially generative AI:

🏳️ Declarations on Generative AI by PEAs (G7, 2023)

🏳️ Global Privacy Assembly’s Resolution on Generative AI (2023)

🏳️ GPA International Enforcement Working Group's Statement on Web Scraping (2023)

🏳️ GPA Resolution on AI and Employment (2023)

Guidelines on Privacy Laws Applied to AI by Privacy Enforcement Authorities

PEAs have launched various initiatives and provided guidelines in response to the increasing use of AI technology:

🇨🇦 Canadian Privacy Regulators published principles for the responsible development and use of generative AI (2023)

🇫🇷 France’s CNIL established an AI department and published guidelines on the processing of personal data (2023)

🇪🇸 Spain’s Data Protection Agency (AEPD) published guidelines on GDPR compliance for AI (2020, 2021)

Türkiye and Other Key Countries

🇹🇷 Türkiye: The Turkish Personal Data Protection Authority (KVKK) published guidelines for the protection of personal data in the field of AI. These guidelines draw on important international sources such as the OECD AI Principles and provide specific recommendations for AI developers, producers, service providers, and decision-makers.

🇪🇺 European Union: The EU has been actively working on AI and data protection. Particularly noteworthy are the EU's decisions, regulations, and implementations regarding generative AI. The EU’s AI Act and GDPR aim to ensure the responsible use of AI systems.

🇬🇧 United Kingdom: The UK’s Information Commissioner’s Office (ICO) has published comprehensive guidelines on AI and data protection (2023). Work continues in the field of data protection and AI.

🇺🇸 United States: The Federal Trade Commission (FTC) provided guidance on the use of algorithms (2020) and published a blog post on truth, fairness, and equity in companies' use of AI (2021). The President's 2023 Executive Order on AI emphasizes the importance of "red teaming" processes to identify vulnerabilities in AI systems.

🇸🇬 Singapore: The Personal Data Protection Commission published guidelines on the use of personal data in AI decision and recommendation systems. These guidelines include best practices and information that must be provided to consumers when obtaining consent.

Enforcement Actions by PEAs Related to AI and Generative AI

PEAs have carried out enforcement actions at the intersection of AI and privacy, focusing largely on OpenAI, the provider of ChatGPT:

🇨🇦 Canada’s OPC reviewed ChatGPT (2023)

🇮🇹 Italy’s Garante blocked OpenAI’s processing of personal data in Italy (2023)

🇯🇵 Japan’s PPC issued a warning to OpenAI (2023)

🇰🇷 South Korea’s PIPC imposed an administrative fine on OpenAI (2023)

🇬🇧 UK’s ICO fined Clearview AI Inc. and ordered it to stop processing data (2022)

🇧🇷 Brazil’s PEA reviewed ChatGPT’s compliance (2023)

This preliminary review sheds light on how data protection laws are being applied to generative AI service providers worldwide and underscores the importance of international cooperation in enforcing privacy laws. These developments also feed into the ongoing review of the OECD’s Recommendation on Cross-border Co-operation in the Enforcement of Laws Protecting Privacy (OECD, 2007).

To Sum Up:

Recommendations for the Future

To enhance collaboration and alignment between AI and privacy communities, here are some policy recommendations:

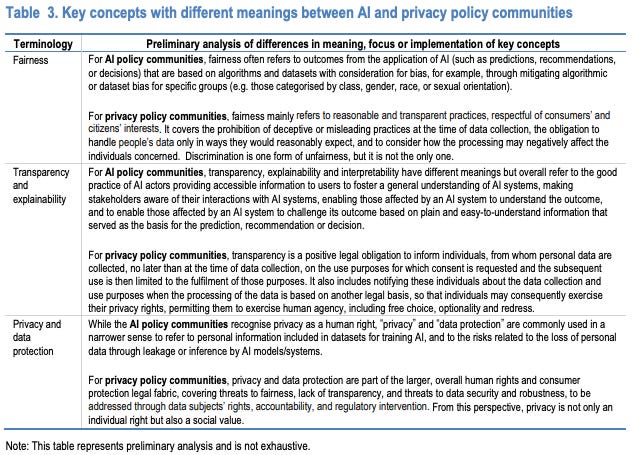

Standardizing Terminology: Bridging language differences between AI and privacy communities will facilitate common understanding and cooperation.

Identifying Common Areas: Pinpointing areas of alignment between AI and privacy principles can help develop joint projects and policies.

Strengthening International Cooperation: Establishing and bolstering international cooperation mechanisms on AI and privacy is crucial.

Recommendations for Governments

Investing in AI Research and Development: Encourage AI investments in both public and private sectors.

Creating an Inclusive AI Ecosystem: Enhance access to data, computing technologies, and other resources.

Shaping a Collaborative AI Management and Policy Environment: Develop national and international policies for effective AI management.

Developing Human Resources and Preparing for Workforce Transformation: Manage the impacts of AI on the workforce.

Promoting International Cooperation and Measurement of Reliable AI: Foster international cooperation in AI policies.

Key Points

Policy Areas: Several policy areas have been identified where collaborative efforts between AI and privacy communities can yield significant benefits. However, synergy is low or non-existent in some areas.

Terminology Differences: Terminology differences between AI and privacy communities can hinder alignment and coordination.

Importance of Practical Guidance

As Luke Munn and David Leslie have pointed out, principles and values are open to interpretation and do not, on their own, provide concrete guidance to drive safe, fair, and beneficial practices. Hence, we need evidence-based guidance that considers the real harms that can arise in the context of AI systems. As development accelerates, the need for robust practical guidance becomes even more pressing.

Overlapping and Diverging Principles of AI and Privacy in OECD Guidelines

Principle 1.1: Inclusive Growth, Sustainable Development, and Well-being

Environmental Impacts

There are several areas where AI and privacy communities can positively collaborate on environmental outcomes:

Smart City Initiatives

Intersection of Demographic and Environmental Data

However, environmental protection is often outside the scope of privacy and data protection regulations.

Economic Prosperity and Privacy

The positive impacts of AI on economic prosperity are not directly the focus of data protection regulations:

Reducing Product and Service Costs: AI can reduce costs through automation of specific tasks.

Improving Health Outcomes: AI has the potential to improve health outcomes through better predictions in healthcare.

Balancing these benefits with privacy rights and other human rights is crucial.

Disadvantages to Vulnerable Groups

Economic displacement and the impact of AI on jobs are outside the scope of data protection regulations. However:

Privacy Management Programs (PMPs): Data controllers should develop appropriate measures based on privacy risk assessments.

Protection of Vulnerable Groups: Protecting the personal data of vulnerable groups, such as children, is a key focus of the OECD Working Party on Data Governance and Privacy (WPDGP).

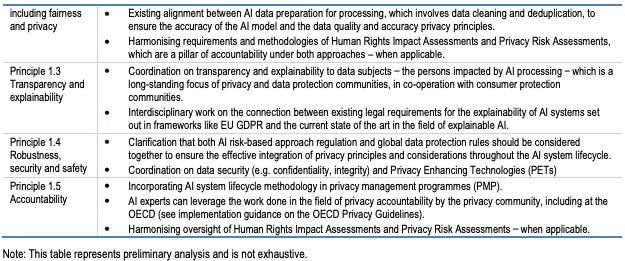

Principle 1.2: Rule of Law, Human Rights, Democratic Values, Fairness, and Privacy

Bias and Discrimination

In the AI Community: Bias and discrimination are critical issues. The widespread use of generative AI amplifies these risks, which can stem from the large datasets and foundation models involved. Sources include historical bias, representation bias, and measurement bias. Creating a fair AI system is challenging because different definitions of fairness can conflict with one another.

In the Privacy Community: Illegal discrimination is a significant concern, and assessing the risk of discrimination is part of evaluating personal data processing. Many countries classify certain types of personal data as sensitive and restrict their use, partly to prevent discrimination (e.g., on the basis of age or gender).

Reliable AI and Data Quality: Trustworthy AI relies on reliable data, yet the data collection process can introduce socially constructed biases and errors. AI actors aim to work with accurate data to ensure reliable outcomes. The OECD Privacy Guidelines emphasize data quality: personal data should be relevant to the purposes for which they are used and, to the extent necessary for those purposes, accurate, complete, and up to date.

Terminological differences can affect how principles are applied and how policy recommendations are framed. See the report for the specific terminological distinctions.
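To make the point about conflicting fairness concepts concrete, here is a minimal Python sketch using made-up toy data. It compares two common fairness metrics: demographic parity (equal rates of positive outcomes across groups) and equal opportunity (equal true positive rates across groups). The choice of metrics, the function names, and the data are my own illustrative assumptions, not something taken from the report.

```python
from typing import Sequence


def selection_rate(preds: Sequence[int]) -> float:
    """Share of individuals in a group who receive the positive outcome."""
    return sum(preds) / len(preds)


def true_positive_rate(labels: Sequence[int], preds: Sequence[int]) -> float:
    """Share of truly positive individuals who are predicted positive."""
    hits = [p for y, p in zip(labels, preds) if y == 1]
    return sum(hits) / len(hits)


# Hypothetical toy data: two groups with different base rates of the positive label.
labels_a = [1, 1, 1, 0]
labels_b = [1, 0, 0, 0]

# A "perfect" classifier that simply predicts the true label for everyone.
preds_a = list(labels_a)
preds_b = list(labels_b)

# Demographic parity compares selection rates; equal opportunity compares TPRs.
dp_gap = abs(selection_rate(preds_a) - selection_rate(preds_b))
eo_gap = abs(true_positive_rate(labels_a, preds_a) - true_positive_rate(labels_b, preds_b))

print(f"Demographic parity gap: {dp_gap:.2f}")  # prints 0.50
print(f"Equal opportunity gap: {eo_gap:.2f}")   # prints 0.00
```

When base rates differ between groups, even a perfectly accurate classifier satisfies one definition while violating the other, which is one reason "fair" can mean different things to different communities.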

Key Policy Recommendations:

Fairness Discussions: Both communities should understand the different meanings of fairness. Efforts to mitigate unfair outcomes can be strengthened by harmonizing socio-legal and computational perspectives.

Privacy and Data Protection Practices: These practices should be clarified for the AI community, covering principles like data minimization, purpose limitation, and information rights.

Human Rights and Democratic Values: Privacy laws have extensive experience in managing competing rights and interests, which can be valuable in practical AI use cases. Human rights impact assessments and privacy risk assessments should be aligned more closely between AI and privacy areas.

Principle 1.3: Transparency and Explainability

Transparency and Traceability

Role of Privacy Authorities: Privacy and consumer protection authorities have significant experience in assessing whether the information provided about personal data processing is adequate. Data protection laws such as the GDPR require that information given to individuals be transparent, clear, and accessible.

Model Cards: Model cards are used to report fundamental information about machine learning models, including metrics related to bias, fairness, and inclusivity, as well as information about the training datasets.
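For readers who haven't seen one, a model card is essentially structured documentation that travels with a model. Below is a minimal Python sketch of what such a record might hold. The field names, the model name, and the metric values are hypothetical placeholders I chose for illustration; real model cards typically have more sections, and the report does not prescribe a specific schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """A minimal, hypothetical model card; field names are illustrative only."""
    model_name: str
    version: str
    intended_use: str
    training_data: str  # plain-language description of the training datasets
    metrics: dict[str, float] = field(default_factory=dict)  # e.g. accuracy, fairness gaps
    limitations: list[str] = field(default_factory=list)


card = ModelCard(
    model_name="loan-screening-demo",  # hypothetical model
    version="0.1",
    intended_use="Illustrative example only; not for real credit decisions.",
    training_data="Synthetic applicant records (no real personal data).",
    # Placeholder numbers for illustration, not real evaluation results.
    metrics={"accuracy": 0.91, "demographic_parity_gap": 0.04},
    limitations=["Not evaluated on applicants under 21", "English-language inputs only"],
)

# Serialize to JSON so the card can be published alongside the model.
print(json.dumps(asdict(card), indent=2))
```

Keeping the card as structured data rather than free text makes it easier for reviewers, including privacy authorities, to check that the same information is reported consistently across model versions.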

Explainability and Interpretability

NIST Principles: The U.S. National Institute of Standards and Technology (NIST) has outlined four principles for explainable AI systems: explanation, meaningful, explanation accuracy, and knowledge limits.

Focus of the Privacy Community: Increasingly, the privacy community focuses on AI explainability to ensure the accuracy, fairness, and accountability of data processing. Explainability is crucial for human oversight and protecting individuals' rights.

Explanation Methods: The Spanish Data Protection Agency (AEPD) suggests various ways to provide meaningful information in automated decision-making or profiling scenarios, such as explaining which data are used, how much weight each factor carries in the decision, and the quality of the training data.

Terminological Differences: Transparency and Explainability

Different Meanings: AI communities may assign separate meanings to transparency, explainability, and interpretability, while privacy communities often use transparency to encompass all these terms.

Common Goals: Both AI and privacy policies intersect on improving model performance and detecting algorithmic discrimination. Coordination in these areas should be encouraged.

Key Policy Recommendations:

Common Concerns: AI and privacy communities share common concerns about transparency, explainability, and interpretability but may differ in definitions and focal points.

Alignment with Data Protection Laws: Explainability can serve purposes, such as improving model performance, that fall outside the scope of data protection laws. Ensuring that individuals are informed about AI systems' processes and can contest them, however, is where data protection laws and AI policies align.

Principle 1.4: Robustness, Security, and Safety

Complexity and Opacity: Challenges of Generative AI

The complexity and opacity of generative AI make it difficult to constrain model behavior, which can undermine reliability.

Homogenization and “Algorithmic Monoculture” Risk: When many systems are built on the same or similar models, they share the same vulnerabilities, making large models more susceptible to single points of failure or attacks. For example, the 2023 ChatGPT data breach exposed some users' personal information and chat history.

Alignment with OECD Privacy Guidelines

This principle aligns with the Security Safeguards Principle in the OECD Privacy Guidelines: "Personal data should be protected by reasonable security safeguards against risks such as loss or unauthorized access, destruction, use, modification, or disclosure."

Robustness, Security, and Safety: These concepts overlap with the principles of accuracy and security emphasized in the Global Privacy Assembly’s recent resolution on generative AI.

Data Protection and Security

Identity Theft: Data security failures can cause consumer harms such as identity theft.

Physical or Emotional Harm: Data breaches revealing sensitive information, such as sexual identity or fertility status, can lead to emotional distress, social conflict, and physical harm.

Personal Security: Governments' use of personal data (e.g., political views or ethnic background) can threaten personal security.

Physical Safety and Cybersecurity

Medical Diagnosis and Autonomous Vehicles: Incorrect medical diagnoses, faulty autonomous vehicles, or cyber-attacks on AI-supported power grids pose physical safety risks.

Industry-Specific Security Laws: Security is often combined with cybersecurity regulations and other industry-specific security laws.

Conflict with Data Minimization Principle

Powerful machine learning models typically require large, representative datasets, conflicting with the data minimization principle.

Advancements in machine learning that require less data or process data while preserving privacy can help bridge the gap between developing secure AI models and protecting individuals' privacy rights.
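One well-known way to "process data while preserving privacy" is differential privacy. The report does not necessarily single it out, so treat this as my own choice of example. Below is a minimal Python sketch of the Laplace mechanism applied to a simple counting query: instead of releasing the exact count, we add random noise calibrated to a privacy parameter epsilon. The ages and the function names are hypothetical.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records: Iterable[int], predicate: Callable[[int], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one person changes a count by at most 1, so the
    query's sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical records: ages of individuals in a small dataset.
ages = [23, 35, 41, 29, 62, 55, 38, 47]
rng = random.Random(42)  # fixed seed so the sketch is reproducible

noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"Noisy count of people aged 40+: {noisy:.1f}")
```

A smaller epsilon means more noise and stronger privacy; a larger one means more accurate answers. That trade-off is a small-scale version of the tension the report describes between data-hungry models and the data minimization principle.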

Traditional Cyber Attacks and AI Attacks

Input Manipulation: Attacks that manipulate input to change output or compromise the AI model's integrity.

Tension Between Security and Privacy: Efforts to increase security can sometimes make individuals' data more susceptible to identification risks.
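Input manipulation is easiest to see on a toy model. Here is a minimal Python sketch, assuming a hypothetical linear classifier with made-up weights. It applies a fast-gradient-sign-style perturbation (a well-known evasion technique that I chose for illustration; the report does not prescribe it): each feature moves a small step in the direction that lowers the model's score, and that alone is enough to flip the prediction.

```python
from typing import Sequence


def sign(v: float) -> int:
    """Return 1, -1, or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def score(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    return 1 if score(weights, bias, x) > 0 else 0


def perturb(weights: Sequence[float], x: Sequence[float], epsilon: float) -> list[float]:
    # For a linear score w.x + b, the gradient with respect to x is just w.
    # Shifting each feature by -epsilon * sign(w_i) lowers the score as much as
    # possible while changing no single feature by more than epsilon.
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input.
weights = [0.8, -0.5, 0.3]
bias = -0.1
x = [0.5, 0.2, 0.4]

x_adv = perturb(weights, x, epsilon=0.25)
print("original prediction:", predict(weights, bias, x))       # prints 1
print("perturbed prediction:", predict(weights, bias, x_adv))  # prints 0
```

Real attacks target far more complex models, but the principle is the same: small, targeted changes to the input can change the output without the input looking meaningfully different.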

New Approaches and Solutions

These problems require existing frameworks to be supported by new approaches and solutions. NIST’s AI Risk Management Framework and the recent publication on Adversarial Machine Learning provide guidance on this matter.

Generative AI and Security Vulnerabilities

Generative AI models' capacity to deceive can create security vulnerabilities when they are used in certain contexts. Governments have started using new tools to identify and manage the security risks of generative AI. For example, the 2023 U.S. Executive Order emphasizes the importance of "red teaming" to identify security vulnerabilities in AI systems.

Key Policy Recommendations:

There is significant potential for alignment and cooperation between the AI and privacy policy communities on two topics: the risk of data leakage from generative AI models, and Privacy-Enhancing Technologies (PETs).

However, more understanding and collaboration are needed to support the AI community's broader approach to long-term privacy rules and standards.

Principle 1.5: Accountability

Basic Information: Both AI and privacy communities work on accountability and risk management.

Resource Investment: Both communities invest significant resources in risk management for AI systems.

OECD Working Parties: The OECD’s WPDGP and WPAIGO working parties have developed accountability frameworks that can be integrated into AI governance.

AI Classification and Accountability

Detailed Framework: The OECD’s work in this area aims to provide a robust framework that identifies risks, stakeholders, and mitigation measures throughout the AI system lifecycle.

Responsible Business Practices

OECD Guide: The OECD Guidelines for Multinational Enterprises on Responsible Business Conduct can help integrate AI and privacy efforts.

That's all for today, folks! I hope you enjoyed our deep dive into the fascinating intersection of AI and data privacy. Remember, the world of tech is always evolving, and staying informed is key to keeping up.

If you found this newsletter insightful, don't forget to subscribe and share with your fellow tech enthusiasts. Your support means the world to me!

Until next time, stay curious and keep biting into those tech updates!