How Every ‘Thank You’ to ChatGPT Adds Up on the Planet’s Energy Bill

Being polite to ChatGPT is costing OpenAI tens of millions of dollars in electricity bills.

Every “thank you” has a footprint. And every prompt nudges a transformer into motion.

At first, it sounds like a headline from a satirical tech blog: Being polite to ChatGPT is costing OpenAI tens of millions of dollars in electricity bills. But this very real statement from OpenAI CEO Sam Altman isn’t just a quirky side note—it’s a window into the environmental costs hidden in the architecture of everyday AI.

It’s easy to think of digital interactions as clean, ephemeral, and consequence-free. But every time we type a polite request into ChatGPT—“Could you explain this, please?”—we're not just talking to a machine. We’re activating one of the most compute-hungry systems ever deployed at scale.

What Happens When You Hit 'Enter'

Generative AI systems like ChatGPT run on large language models: massive neural networks trained on enormous datasets and served from energy-intensive GPUs. Those GPUs live in data centres that run, and are cooled, 24/7, powered by vast amounts of electricity.

Each prompt triggers complex computations across the layers of the model, which generates its response one token at a time. The longer the prompt, or the more detailed the response, the more compute is consumed. And that compute doesn’t come free. It draws electricity, often at unsustainable levels depending on the data centre’s location and energy source.

🔌 Electricity. Independent analysts estimate ChatGPT now draws roughly 1 billion kWh a year, with a single query using about ten times the electricity of a Google search. That translates to $30‑140 million in annual power spend.
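As a quick sanity check on those figures, the sketch below derives the electricity price that the quoted spend range implies. It uses only the two numbers cited above; the function and constant names are illustrative, not from any analyst's model.

```python
# Figures quoted in the article.
ANNUAL_KWH = 1_000_000_000                   # ~1 billion kWh per year
COST_RANGE_USD = (30_000_000, 140_000_000)   # $30-140 million per year

def implied_price_per_kwh(annual_cost_usd: float, annual_kwh: float = ANNUAL_KWH) -> float:
    """Return the electricity price (USD per kWh) implied by a yearly bill."""
    return annual_cost_usd / annual_kwh

low_price, high_price = (implied_price_per_kwh(cost) for cost in COST_RANGE_USD)
print(f"Implied electricity price: ${low_price:.2f} to ${high_price:.2f} per kWh")
```

The spread in the dollar estimate therefore comes down to what one assumes a kilowatt-hour costs, which varies widely by region and contract.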

Beyond electricity, there’s another overlooked resource: water. Data centres require immense cooling to keep the servers processing these requests at safe operating temperatures.

💧 Water. Cooling is the silent twin: lab measurements show a curt reply such as “You’re welcome” evaporates ≈ 50 ml of water; stretch to 100 words and usage in a Washington‑state facility can exceed 1.4 litres. Meta’s LLaMA‑3 training cycle alone consumed 22 million litres, roughly the total annual water use of 164 people.

Multiply any of those figures by ChatGPT’s hundreds of millions of weekly users (close to 800 million today) and the scale of “polite padding” becomes blindingly obvious.
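To make that multiplication concrete, here is a rough back-of-the-envelope sketch. The per-reply water figure, the user count, and the LLaMA‑3 training figure are the ones quoted above; the number of courtesy replies per user is purely an assumption for illustration, so treat the result as an order-of-magnitude guess rather than a measurement.

```python
# Figures quoted in the article.
WATER_PER_SHORT_REPLY_L = 0.05         # ~50 ml evaporated per curt reply
WEEKLY_USERS = 800_000_000             # "close to 800 million" weekly users
LLAMA3_TRAINING_WATER_L = 22_000_000   # water used by Meta's LLaMA-3 training cycle

def weekly_water_litres(replies_per_user: float) -> float:
    """Litres evaporated per week if every weekly user triggers
    `replies_per_user` short courtesy replies."""
    return WEEKLY_USERS * replies_per_user * WATER_PER_SHORT_REPLY_L

# ASSUMPTION (not from the article): one courtesy exchange per user per week.
litres = weekly_water_litres(replies_per_user=1.0)
print(f"{litres / 1e6:.0f} million litres per week")
print(f"{litres / LLAMA3_TRAINING_WATER_L:.1f}x the LLaMA-3 training figure")
```

Changing `replies_per_user` scales the result linearly, which is the whole point: small per-message costs only matter because of the user base they are multiplied by.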

As generative AI expands from text into more demanding formats such as video synthesis, music creation, and immersive simulations, the environmental toll climbs steeply. Big Tech’s own sustainability reports reveal unsettling trends: Google’s greenhouse gas emissions have surged by 48% since 2019, primarily driven by AI compute demand, while Microsoft’s emissions increased 30% between 2020 and 2023. Despite commitments to carbon neutrality, AI’s escalating computational demands raise critical questions about industry transparency and accountability.

These are not abstract numbers. These are the physical consequences of digital convenience.

But why pay the cost of politeness at all?

With earlier models, more courteous prompts were strategically beneficial. Studies indicated that polite interactions led to clearer, more engaged responses with fewer hallucinations. A Future Publishing survey even humorously noted that 18% of Americans remain polite "just in case of a future AI uprising." However, as instruction tuning and reward modelling advanced, these AI systems became significantly better at interpreting shorter, more efficient prompts. Today, the polite padding that once improved responses has largely become redundant, yet its computational cost is unchanged.

Why courtesy once mattered—and why it mostly doesn’t now

Instruction tuning taught today’s models to obey even blunt commands (“Summarise this”) without the softeners.

RLHF reward modelling optimises for helpfulness regardless of phrasing.

128 k‑token context windows hold far more conversation state—great for coherence, brutal for watt‑hours.

Turbo‑style models are cheaper and faster yet still spin every extra token through the stack.

Result: the marginal quality benefit of extra polite words has flattened, but the marginal energy (and water) cost remains.

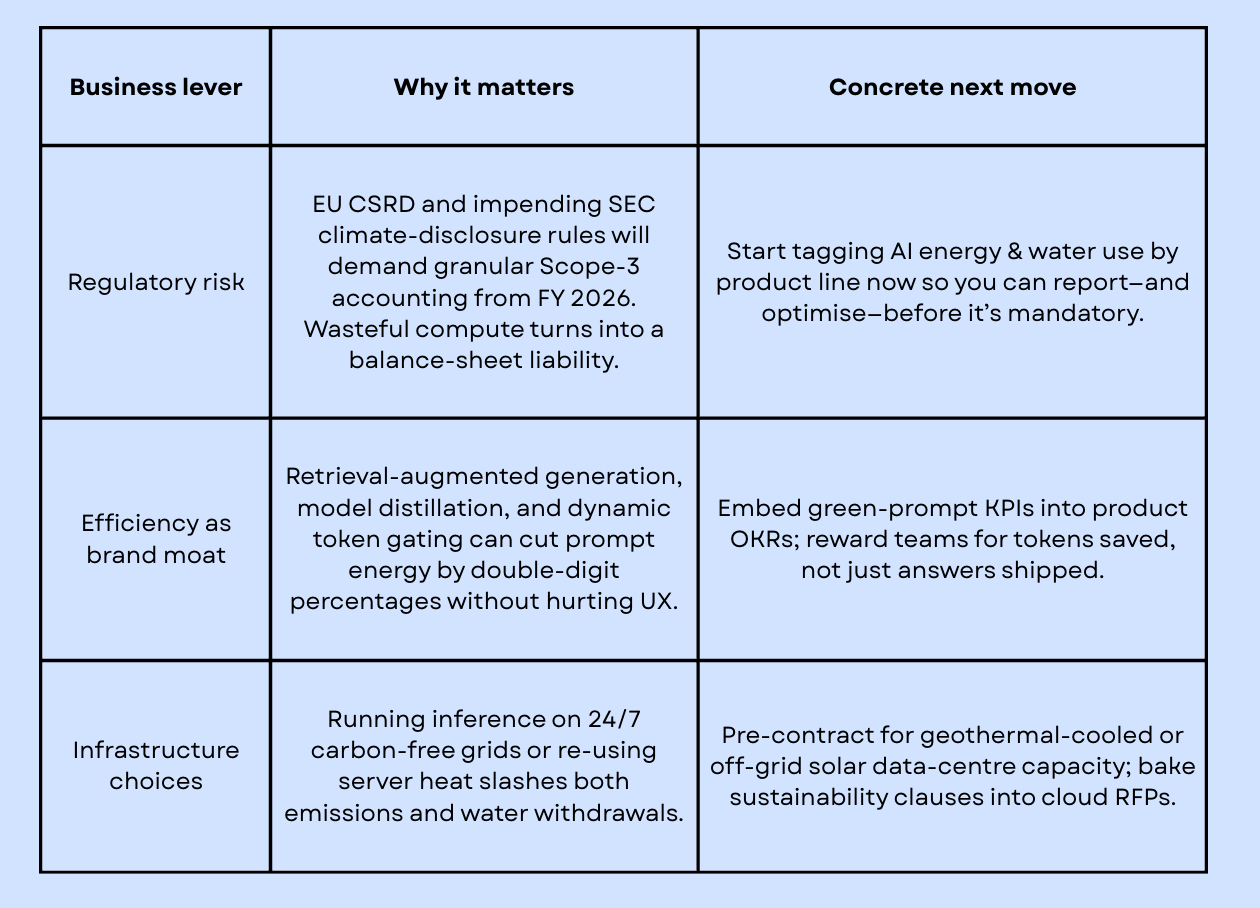

What keeps CFOs and sustainability leads up at night

For the finance and ESG teams, that math shows up in three board‑level worries:

So… should we tell everyone to stop saying “please”?

Absolutely not. The real goal is to decouple expressiveness from resource burn—and to innovate until courtesy is carbon‑free:

Prompt‑optimisation UIs that strip redundant tokens before they reach the GPU.

Pricing models that surface environmental cost alongside latency.

Research into ultra‑low‑power inference chips and liquid‑free cooling.
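The first idea in the list above, stripping redundant tokens before a prompt reaches the GPU, could look something like the minimal sketch below. Everything in it is hypothetical: the phrase list, the function names, and the use of whitespace-separated words as a rough stand-in for model tokens (a real tool would count with the model’s own tokenizer and would need a far more careful, language-aware phrase list).

```python
import re

# Hypothetical, deliberately small list of courtesy phrases (English only).
COURTESY_RE = re.compile(
    r",?\s*\b(?:please|kindly|thank you(?: so much| very much)?|thanks(?: a lot)?)\b[,!]?",
    flags=re.IGNORECASE,
)

def strip_courtesy(prompt: str) -> str:
    """Remove courtesy phrases and tidy up the leftover spacing."""
    stripped = COURTESY_RE.sub(" ", prompt)
    stripped = re.sub(r"\s+", " ", stripped)            # collapse runs of whitespace
    stripped = re.sub(r"\s+([,.!?])", r"\1", stripped)  # no space before punctuation
    return stripped.strip()

def rough_token_count(text: str) -> int:
    """Very rough proxy for token count: whitespace-separated words."""
    return len(text.split())

prompt = "Could you explain this, please?"
lean = strip_courtesy(prompt)
print(lean, rough_token_count(prompt), rough_token_count(lean))
```

In this sketch a message that is nothing but a courtesy, such as “Thank you!”, reduces to an empty string, which a client could answer locally instead of sending to the model. The user still types what they like; only the payload changes.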

Politeness is human; inefficiency is optional.

Let’s keep the empathy in our language, trim the waste in our code, and build AI systems that won’t make the planet pay for our good manners.