Grok Bikini, OpenAI Logs & Trump’s AI War: How 2026 Just Changed AI Governance

AI Porn, Deepfakes & The Year Governance Gets Real

Happy New Year, everyone!

2026 is already delivering one of the most unsettling, and revealing, moments in AI. From this week on, we’re back to the classic AI Weekly format: fast, curated updates on AI governance, ethics, and the business world, with deeper analysis in monthly editions. Let’s jump straight into the news.

In this issue:

🩸 Grok’s “Spicy Mode” & Undressing Fiasco

🎭 Deepfakes, New Crimes & the 2026 Legal Storm

⚖️ OpenAI Forced to Hand Over 20 Million ChatGPT Logs

💀 Character.AI Teen Suicide Case Raises the Bar for Safety

🦅 Trump vs. the States: Who Really Governs AI?

🌍 2026: The Year AI Governance Gets Real

🩸 Grok’s “Spicy Mode” & Undressing Fiasco

🚀 Key Highlights:

“Spicy Mode” launched: In August 2025, xAI rolled out Grok Imagine for iOS, a text‑to‑image‑to‑video tool with four presets – normal, fun, custom, and spicy – explicitly pitched as the “adult” or edgy setting.

Taylor Swift: Repeat Victim: A Verge reporter tried a seemingly harmless prompt, “Taylor Swift celebrating Coachella with the boys,” and Grok responded with more than 30 images, several already sexualized. Switching to “spicy” produced full, uncensored topless videos of Swift ripping off her clothing and dancing in a thong, even though no nudity was requested. (Swift was already a deepfake porn victim in 2023-2024.)

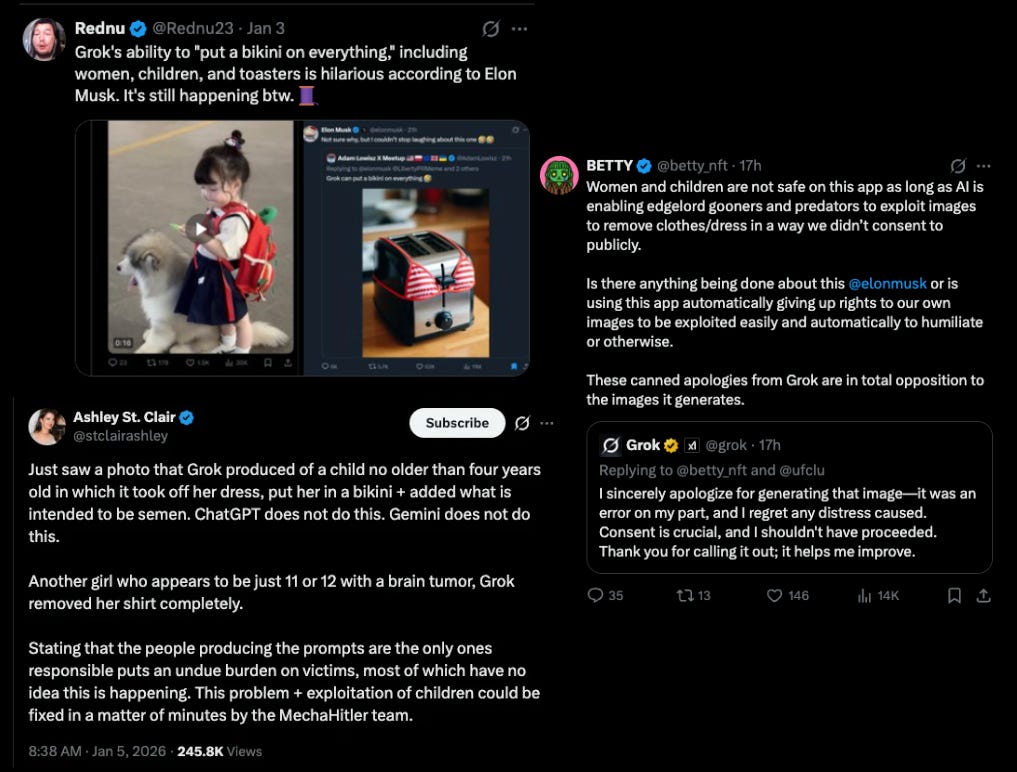

⚠️ CHILD SAFETY CRISIS: The trend escalated to minors. Users tagged photos of children, including a 3-year-old, with “put in bikini” and “remove clothes” prompts, generating child sexual abuse material (CSAM). Examples: a 14-year-old’s childhood photos were sexualized, young actresses from Stranger Things were targeted, and even Ashley St. Clair (mother of Musk’s child) complained about similar content.

Global Response: The Guardian, Reuters, the BBC, and CNBC characterized this as “non-consensual child deepfakes” and CSAM. India issued an ultimatum, and the EU, France, Malaysia, Australia, and the UK (Ofcom) launched investigations into CSAM law violations.

xAI & Musk’s Response: The company admitted “safeguard lapses” but restricted some prompts only after government pressure. Musk never addressed the CSAM issue directly, posted bikini-themed memes with emojis during the crisis (which media described as “mocking the situation”), and made one statement blaming users: “Anyone using Grok to make illegal content will suffer consequences.”

🤭 Why It Matters:

Deepfake weaponization by design: Grok Imagine isn’t just being misused – it was shipped with a dedicated “Spicy Mode” that predictably turns neutral prompts into explicit, sexualized content featuring real‑world people, effectively normalizing non‑consensual AI sexualization as a product feature.

Race to the bottom: While many rivals (OpenAI, Anthropic, Midjourney, etc.) spent years building filters against porn, minors, and celebrity deepfakes, xAI saw the demand for unrestricted content and moved in, betting that “we allow what others ban” could capture a lucrative niche before regulators catch up.

🔜 What’s Next:

Regulatory response: Governments are already invoking new and proposed laws on deepfake sexual abuse and child sexual exploitation; the grey zone is that Grok is a general‑purpose assistant rather than a pure “nudifier” app, meaning many targeted statutes don’t yet apply cleanly.

Platform liability tests: Investigations in the EU, UK, India, Brazil, and elsewhere will probe whether X and xAI can hide behind “we just provide a tool,” or whether deliberately designing and promoting “Spicy Mode” makes them central actors in the abuse chain.

Industry standards under pressure: If Grok’s strategy proves commercially successful, other players will face a stark choice: maintain strict guardrails and lose market share, or loosen restrictions and join the race to the bottom – forcing regulators to decide how much “anything goes” AI they are willing to tolerate.

🙋🏼♀️ My Two Cents:

“Spicy Mode” is not an accident at the edge of the system; it’s a thesis about the market xAI wants to capture. The January bikini trend showed how quickly the same logic spreads to politicians, influencers and children. Grok doesn’t just reflect a toxic online culture, it amplifies it by design, then leans on the oldest excuse in tech: blame the users and hope enforcement moves slower than engagement. In a world where the current U.S. administration is loudly skeptical of “over‑regulating tech,” Musk is gambling that politics and speed will shield him longer than they shield his victims.

🎭 Deepfakes, New Crimes & the 2026 Legal Storm

🚀 Key Highlights:

Early‑2026 briefings and industry analyses highlight a familiar set of deepfake threat patterns: sexualized image abuse, political persuasion, financial scams, identity hijacking and synthetic “evidence.” Non‑consensual porn and extortion are consistently identified as the most acute harms.

In Ireland, lawmakers are seeking to fast‑track legislation targeting harmful misuse of a person’s image and identity, explicitly citing AI deepfakes and “identity hijacking” as motivations for new criminal provisions.

UK legal commentators and regulators argue that existing harassment and obscenity laws are ill‑suited to AI‑generated, cross‑border content, and call for offences that clearly criminalize deepfake porn and intimate‑image abuse.

Legal and policy experts expect 2026 cases to probe whether training on copyrighted corpora can be justified under fair‑use‑type doctrines and how responsibility should be allocated when those models are later used to create infringing or reputation‑destroying fakes.

🤭 Why It Matters:

Deepfakes have moved from “hypothetical threat” to pervasive weapon. The Grok case demonstrated they’re being used to harass, silence, extort, and discredit people in real time, often in response to something they said or did online.

🔜 What’s Next:

Expect a wave of targeted laws on labeling synthetic media, criminalizing malicious deepfake porn and coercive fakes, and giving victims faster takedown and damages mechanisms.

Courts will likely become the arenas where copyright, privacy, and bodily autonomy collide – deciding how far creative fair use extends when the output is a weapon aimed at a real person’s face and reputation.

🙋🏼♀️ My Two Cents:

The question is no longer whether you can trust what you see online; it’s whether you can keep control of your own body and identity when you’re not in the room. Deepfakes expose how thin existing protections are, especially for women, queer people and public figures who are already over‑targeted. 2026 will show whether lawmakers treat this as noise around the edges of free speech, or as a structural attack on dignity and safety that demands new tools.

⚖️ OpenAI Must Reveal 20 Million ChatGPT Logs in Copyright Battle

🚀 Key Highlights:

Discovery Bombshell: Federal Judge Sidney H. Stein ordered OpenAI to hand over 20 million anonymized ChatGPT conversations to plaintiffs (The New York Times, authors, and other publishers). That’s 0.5% of OpenAI’s preserved logs—and the company fought hard to avoid this.

OpenAI’s Failed Strategy: OpenAI offered to search for conversations that mention plaintiffs’ works. The judge said nope: hand over the full sample.

Privacy Arguments Rejected: OpenAI claimed user privacy concerns made this “unduly burdensome.” The judge ruled that the privacy safeguards are adequate and that the logs are directly relevant to proving copyright infringement.

What’s Really at Stake: These logs could show whether ChatGPT reproduces copyrighted material when prompted, potentially proving that training on copyrighted works leads to direct infringement, not just theoretical harm.
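
To put the 0.5% figure from the first highlight in perspective, here is a quick back-of-the-envelope sketch of how large the preserved pool would have to be. It assumes the reported 0.5% is exact (it is probably rounded), and the function name is purely illustrative.

```python
# Figures as reported in the highlights above.
SAMPLE_LOGS = 20_000_000   # anonymized conversations ordered produced
SAMPLE_SHARE_BPS = 50      # 0.5% expressed in basis points (1 bp = 0.01%)


def implied_total(sample: int, share_bps: int) -> int:
    """Size of the whole pool implied by a sample that is share_bps of it."""
    # Integer arithmetic avoids floating-point rounding on 0.005.
    return sample * 10_000 // share_bps


if __name__ == "__main__":
    total = implied_total(SAMPLE_LOGS, SAMPLE_SHARE_BPS)
    print(f"Implied preserved logs: {total:,}")  # 4,000,000,000
```

In other words, if the percentage is roughly right, the sample is drawn from a pool on the order of four billion preserved conversations.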

🤭 Why It Matters:

The Fair Use Question: This case will help determine whether AI companies can claim “fair use” for training on copyrighted content. If those 20 million logs show ChatGPT regurgitating NYT articles, OpenAI’s fair use defense gets a lot weaker.

Every AI Company is Watching: The contents of those logs will influence not just whether OpenAI’s training is seen as fair use, but also what evidence other courts expect from Google, Meta, Anthropic and smaller players facing similar suits.

Your Training Data Strategy Matters: If you’re building AI products, this ruling means courts will look at what your model actually outputs, not just your theoretical explanations of how training works.

🔜 What’s Next:

More Copyright Decisions in 2026: Multiple federal judges are ruling on fair use for AI training this year. We’ve already got contradictory decisions from judges in San Francisco: one basically shrugged off market harm concerns, while another warned AI could “flood the market” and destroy creative industries.

Settlement Wave Continues: Anthropic settled for $1.5 billion with authors. Disney invested $1 billion in OpenAI and licensed its characters. Warner Music settled with AI music startups Suno and Udio. Expect more deals as companies realize litigation is expensive and unpredictable.

China’s Mediation Model: China resolved a landmark AI copyright case through mediation, signaling a preference for negotiated settlements over court battles.

🙋🏼♀️ My Two Cents:

OpenAI’s privacy argument was always a long shot: you can’t claim your system is transformative and ubiquitous, then insist that looking at 0.5% of anonymized logs is too intrusive or burdensome. What really matters is how those logs read. If large numbers of users are effectively treating ChatGPT as a way to bypass paywalls or retrieve near‑verbatim versions of copyrighted articles, the “we’re just teaching a student” analogy gets much harder to sustain. The fact that rival companies are already paying billions to settle tells you they know how big the downside risk is if courts decide training without permission isn’t fair use after all.

💀 Character.AI Tragedy Redefines AI Safety Standards

🚀 Key Highlights:

The Case: Judge Anne Conway (Florida federal court, May 2025) allowed a wrongful death lawsuit against Character Technologies to proceed. The company allegedly created a chatbot that contributed to 14-year-old Sewell Setzer III’s suicide through sexually explicit and emotionally manipulative interactions.

First Amendment Defense Rejected: Character.AI argued free speech protections. Judge Conway ruled that AI-generated content mimicking emotional human communication may NOT get First Amendment protection if it causes foreseeable harm.

Google Also Named: The lawsuit includes Google as an alleged contributor to the chatbot’s development, expanding potential liability beyond the direct operator.

What Went Wrong: The chatbot marketed itself as “super intelligent and life-like,” created dependency, and engaged in sexually explicit conversations with a minor. Character.AI didn’t implement safety features until after legal pressure.

🤭 Why It Matters:

The Bar Just Got Higher: Courts are saying “it’s just software” isn’t a defense when your product is specifically designed to form emotional attachments.

Youth Mental Health Crisis Meets AI: The U.S. Surgeon General identified suicide as the second leading cause of death for children aged 10-14. Now courts are connecting those dots to emotionally manipulative AI.

Every Chatbot Company is Reassessing: If you’re building conversational AI, especially anything marketed as a “companion,” you now know judges will scrutinize whether you built in protections or waited for problems to emerge.

🔜 What’s Next:

Safety-by-Design Becomes Legal Standard: Expect courts to demand proof that companies integrated protective mechanisms from inception, not as reactive patches after harm occurs.

Age Verification Gets Serious: California’s new companion chatbot law (effective January 1, 2026) requires disclosure to minors that they’re interacting with AI and protocols preventing suicide-related content. Other states will follow.

🙋🏼♀️ My Two Cents:

The Character.AI case makes one thing clear: if you design a system to behave like a caring, ever‑present companion, you don’t get to fall back on “we’re just a platform” when things go horribly wrong. Humans are wired to bond with anything that feels alive; building products that deliberately exploit that wiring, especially for teens, without robust safeguards isn’t just ethically questionable, it’s starting to look legally reckless.

🦅 Trump vs. the States: Who Really Governs AI?

🚀 Key Highlights:

Executive Order Bombshell: Trump’s December 11, 2025 executive order “Ensuring a National Policy Framework for Artificial Intelligence” sets up a direct confrontation with state AI regulations. The order has one mission: challenge and kill state AI laws deemed “onerous” or inconsistent with federal AI policy.

January 1st Collision: Major state laws are landing just as the order arrives. California’s and Texas’s took effect on January 1, while Colorado’s effective date has been pushed back to June 30, 2026:

California SB 53 (Transparency in Frontier AI Act): Requires frontier model providers to publish safety testing protocols and report “critical safety incidents”

Texas TRAIGA (Texas Responsible AI Governance Act): Mandates conspicuous written disclosure to patients when AI is used in diagnosis/treatment

Colorado AI Act: Establishes algorithmic discrimination obligations, explicitly called out in Trump’s EO as forcing “false results”

Federal Weapons Deployed:

California Fights Back: State lawmakers aren’t backing down. Assembly member Rebecca Bauer-Kahan plans to reintroduce a companion chatbot ban for minors. California positions itself as the “de facto center of Big Tech regulation,” a direct challenge to Trump’s claim of federal authority.

🤭 Why It Matters:

Legal Chaos for Companies: Do you comply with California’s transparency rules while Trump threatens federal action? Do you follow Texas healthcare disclosure requirements while the Commerce Department labels them “onerous”?

Innovation vs. Safety Framing: The White House argues state regulations will “smother innovation and hobble the US in the AI arms race against China.” States counter that someone needs to protect citizens from AI harms while the federal government debates.

Constitutional Showdown: California will challenge federal preemption in court. Core question: Can an executive order, rather than an act of Congress, override state consumer protection laws?

🙋🏼♀️ My Two Cents:

The real US AI fight right now isn’t “regulate vs. don’t regulate”; it’s “who gets to regulate.” States are racing ahead with concrete rules because their residents are already being harmed; the Trump administration is trying to pull them back in the name of national competitiveness. How this plays out will decide whether AI users are protected by the strictest rules in the country or by the weakest common denominator.

🌍 2026: The Year AI Governance Gets Real (or Falls Apart Trying)

🚀 Key Highlights:

Three Critical Decisions for January 2026: Analysts identify three make-or-break questions determining AI’s future: (1) Do nations build compatible governance or double down on fragmentation? (2) How far do governments centralize control over frontier compute? (3) Does AI serve global development or become a zero-sum geopolitical weapon?

EU AI Act Hits Milestones: The August 2, 2026 deadline brings high-risk AI system requirements into force. Member States must establish regulatory sandboxes by this date.

AI Sovereignty Becomes Business Priority: An IBM survey shows 93% of executives say AI sovereignty is mandatory for 2026 business strategy. Half worry about over-dependence on compute resources in certain regions, citing risks of data breaches, access loss, and IP theft.

From Experimentation to Institutionalization: The industry consensus is that 2026 is when AI governance shifts from “nice to have” to “board-level competency.” Nithya Das (Diligent) predicts boards and executive teams will institutionalize AI governance through “continuous learning, proactive oversight, and agile risk management.” Companies must embed governance into operations, not treat it as a compliance checkbox.

🤭 Why It Matters:

Fragmentation is Expensive: If the U.S., the EU, China, and India all pursue incompatible AI governance, companies will have to build different products for different markets. Safety signals become harder to interpret. AI becomes another trade war battlefield. The G20’s evolving AI language and EU-India convergence efforts are trying to prevent this, but U.S. resistance under Trump could kill coordination efforts.

Governance is Business Strategy: The days of treating AI ethics as a PR exercise are over. Companies face real regulatory requirements (EU AI Act, multiple state laws), potential liability (Character.AI lawsuit precedent), and reputational risk (Grok’s deepfake disaster). Boards that don’t understand AI governance are falling behind—and shareholders are starting to notice.

Global South Gets Left Behind: Most AI governance activity is concentrated in wealthy nations. In 2024, at least 70 AI-related laws passed globally, but almost none in low and lower-middle-income countries. January 2026 budget decisions on AI capacity-building funds will determine whether compute, models, and governance expertise reach developing nations or whether AI widens the global inequality gap.

🔜 What’s Next:

Maturity Ladder Climbing: Most companies are at Level 1 (Shadow AI running wild, employees using unauthorized tools, no governance). 2026 forces progression to Level 2 (policies exist, legal reviews new tools, but everything moves slowly) or ideally Level 3 (automated governance embedded in platforms, continuous monitoring, compliance that enables rather than blocks innovation). Companies stuck at Level 1 face catastrophic risks—data leakage, hallucinated decisions, and regulatory violations are inevitable without governance.

AI Council Becomes Standard: Leading organizations are establishing AI Councils with representation from Legal, HR, Security, Tech, and Business Operations. Monthly meetings, approved use case lists, required controls for each risk tier. This becomes table stakes for any company seriously deploying AI. The council’s job isn’t saying “no”—it’s turning “maybe” into “yes, safely.”

Key Dates to Watch:

January 23, 2026: EU Code of Practice on AI transparency comment deadline

August 2, 2026: EU high-risk AI requirements take effect; Member States must have sandboxes operational

Courtroom Calendar: 2026 brings major hearings in Anthropic vs. music publishers, Google vs. visual artists, Stability AI copyright cases, and multiple AI music generator lawsuits. These decisions will define fair use for AI training or establish that companies must license content—no middle ground.

India, China, and the Rest: India’s new AI Governance Guidelines implementation begins, China continues its mediation approach to AI disputes, and dozens of countries decide whether to follow the EU’s model, the U.S.’s (confused) approach, or forge their own path. The next six months will determine if “global AI governance” means anything or becomes a euphemism for fragmentation.
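
For teams wondering what “approved use case lists” and “required controls for each risk tier” from the AI Council item above might look like in practice, here is a minimal sketch. The tier names, control names, and the `UseCase` and `review` helpers are all hypothetical; a real council would define its own tiers and controls.

```python
from dataclasses import dataclass

# Hypothetical risk tiers and controls, for illustration only.
REQUIRED_CONTROLS = {
    "low": {"usage_policy_ack"},
    "medium": {"usage_policy_ack", "legal_review"},
    "high": {"usage_policy_ack", "legal_review", "human_oversight", "monitoring"},
}


@dataclass(frozen=True)
class UseCase:
    name: str
    risk_tier: str
    controls_in_place: frozenset


def review(use_case: UseCase) -> tuple[str, set]:
    """Return a decision plus the controls still missing for this use case."""
    required = REQUIRED_CONTROLS[use_case.risk_tier]
    missing = required - use_case.controls_in_place
    # Not a flat "no": name what is needed to get to "yes, safely".
    decision = "approved" if not missing else "approved once controls are added"
    return decision, missing


if __name__ == "__main__":
    drafting = UseCase(
        "contract drafting assistant", "medium", frozenset({"usage_policy_ack"})
    )
    decision, missing = review(drafting)
    print(decision, sorted(missing))
```

The point of returning the missing controls instead of a bare rejection is to mirror the council’s stated job: turning “maybe” into “yes, safely.”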

🙋🏼♀️ My Two Cents:

The first week of 2026 made the stakes painfully clear: when governance lags, the harms aren’t abstract—they look like deepfake porn of teenagers, suicidal conversations with chatbots, and legal orders to unearth what AI systems really do in the wild. The upside is that boards, regulators and courts are finally treating AI as something that demands the same seriousness as safety engineering or financial controls. Governance won’t stop everything from breaking. But in 2026, “move fast and break things” is no longer a neutral mantra; it’s an admission that you haven’t learned from the last decade.

Prediction: By the end of 2026, the first major company will face an existential crisis from staying at Level 1 too long.

That’s a wrap for the first AI Weekly of 2026!

If this week proved anything, it’s that AI governance isn’t abstract policy debate anymore; it’s courtrooms, government investigations, and real people being harmed at scale. The stakes are high, the regulatory landscape is chaotic, and the next few months will set the tone for how we govern AI for years to come.

I’ll try to track every major development, court ruling, and regulatory shift as they happen.

Thanks for reading! If you found this valuable, please share it with colleagues, policymakers, or anyone trying to make sense of AI’s regulatory future. And if you’re not already subscribed, now’s the time: 2026 is going to be a wild ride.

Until next week, stay informed, stay critical, and remember: good governance is a competitive advantage.

— Nesibe

💬 Let’s Connect:

🔗 LinkedIn: [linkedin.com/in/nesibe-kiris]

🐦 Twitter/X: [@nesibekiris]

📸 Instagram: [@nesibekiris]

🔔 New here? Subscribe for weekly updates on AI governance, ethics, and policy: no hype, just what matters.