AI Wrapped 2025

A few spoilers before you scroll

Happy New Year, everyone.

Before we rush into what’s next, I wanted to pause for a moment and look backward, and share a few highlights from the past year that I genuinely think you’ll care about.

Over the last year, I did something a bit obsessive but very intentional. I read, cross-checked, and compared more than 70 AI reports, policy documents, market analyses, and research papers. I pulled all of that into a 125-page working document, and eventually shaped it into this deck.

The full 51-page report is here: AI WRAPPED 2025

Not because I wanted more numbers. We already have too many numbers. I did it because 2025 felt like the year AI stopped being a topic and started being a condition. So this is not a “top trends” wrap-up. It’s my attempt to describe what changed under the surface. Here are a few spoilers that explain what you’re about to read.

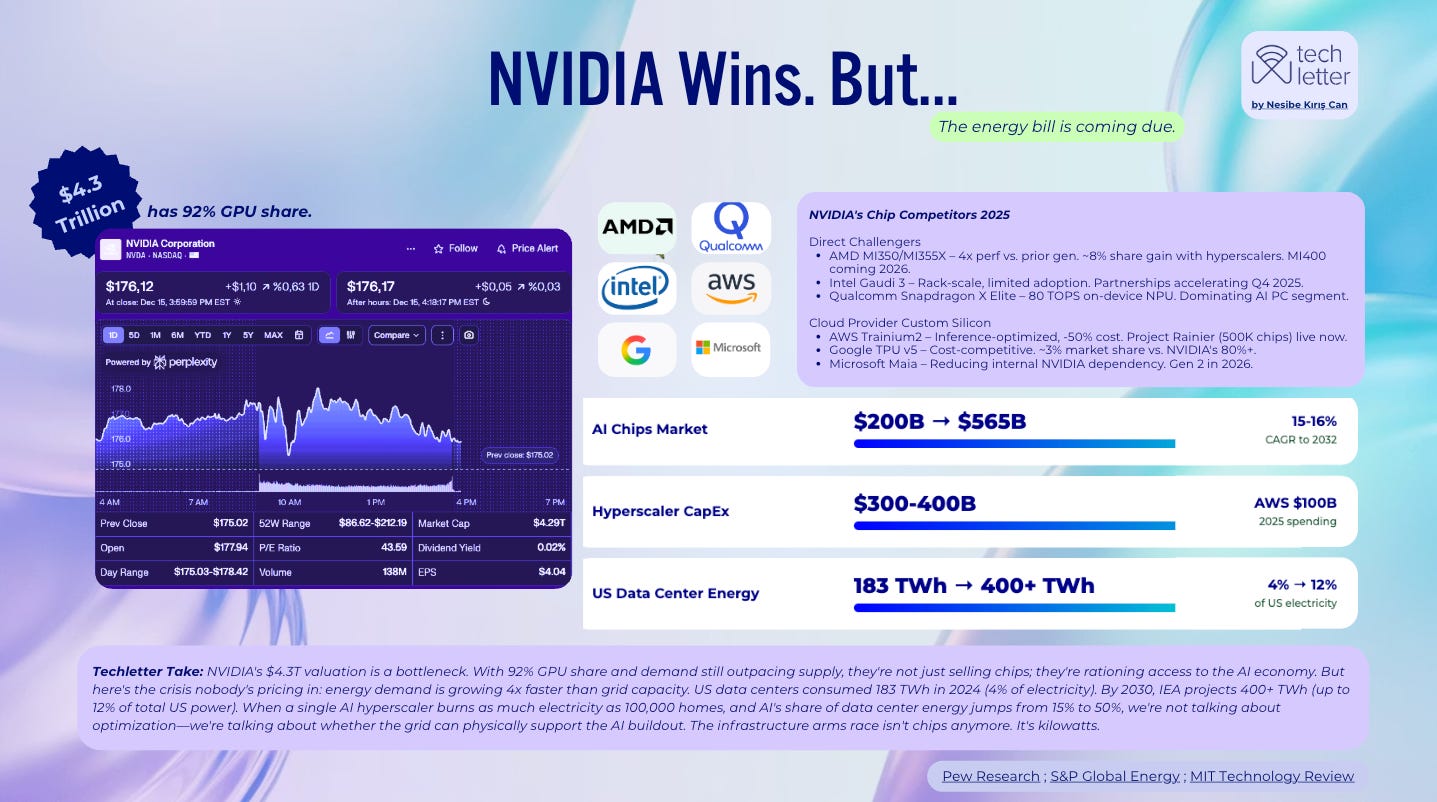

1) The real frontier isn’t models anymore. It’s electricity.

We keep debating which model is “best” while the real competition is happening at a much more boring layer: who can secure compute, power, and physical infrastructure at scale.

NVIDIA is still the chokepoint, but the bigger story is that the AI arms race is quietly turning into a grid problem. When a single hyperscaler can consume electricity at the scale of a small city, “AI strategy” becomes “energy strategy.” And if you’re a business leader, that’s not abstract. It translates into cost volatility, capacity rationing, and an uneven playing field where the winners are the ones who can literally afford to run intelligence.

This is one of the reasons I titled the deck “From Hype to Habitat.” We’re not just adopting software. We’re building an environment.

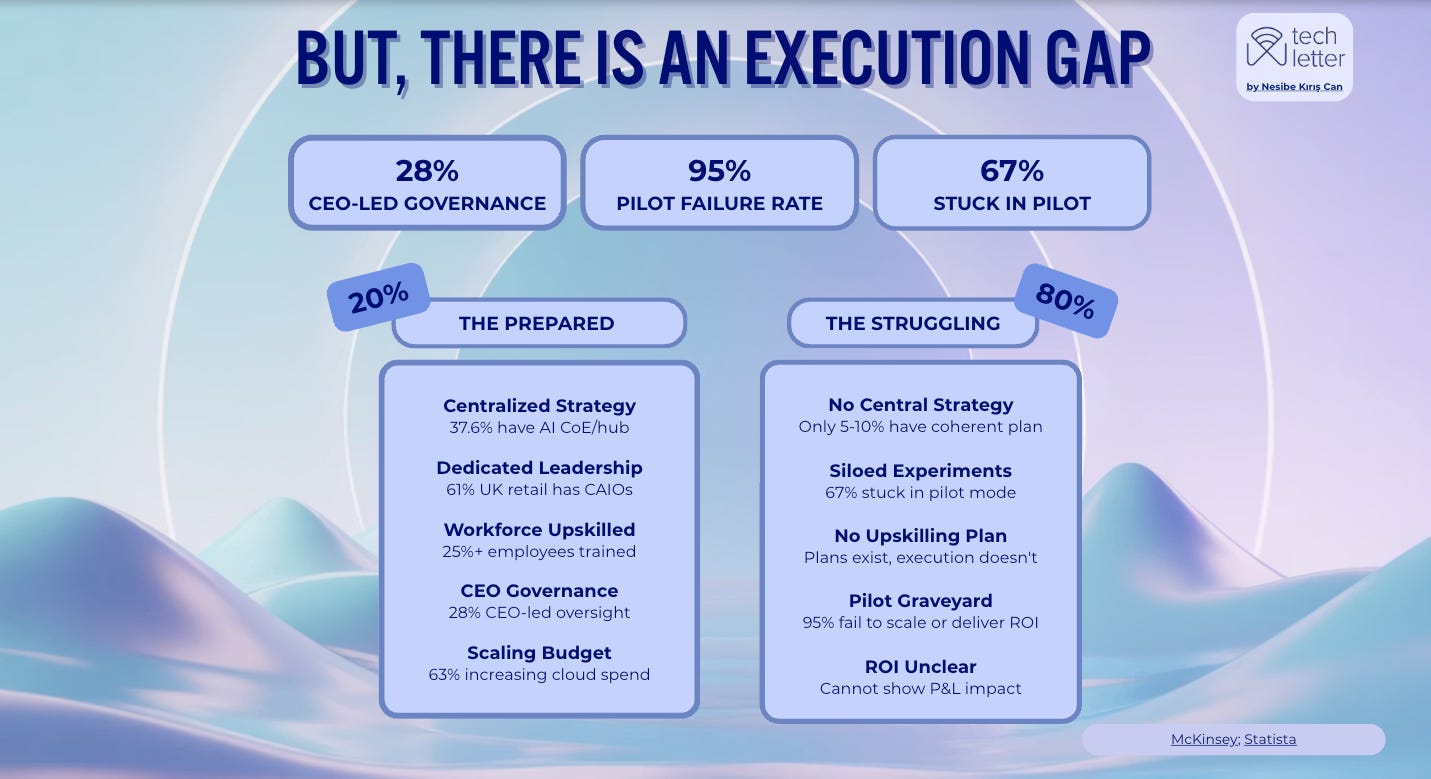

2) Most companies are “using AI.” Very few are compounding value.

One of the most consistent patterns I saw across sources is what I call the implementation gap.

Adoption metrics look impressive, but scaling metrics don’t. A huge share of organizations are still stuck in pilot mode, and most initiatives don’t translate into durable P&L impact. That’s not because the models are weak. It’s because AI is being treated like a tool you plug in, instead of a governed product lifecycle.

The boring truth is that value correlates less with “AI sophistication” and more with foundations: data architecture, ownership clarity, monitoring, and governance readiness. Retail and finance didn’t win because they discovered magic prompts. They won because they already had years of structured data and operational discipline.

AI rewards the organizations that built boring muscles early.

3) Agents are spreading, but they’re landing in the messiest places first.

Agents are spreading first into routine operational decisions: the unglamorous layer of work most companies never governed properly, made up of processes that are informal, undocumented, and weakly owned. That’s why agentic AI is both exciting and dangerous: it automates the exact parts of organizations that were already running on vibes.

So when something breaks, nobody can answer the question that actually matters: who owned the decision?

This is the shift I think many teams are underestimating. Agents don’t just add automation. They test whether your organization has a real accountability spine.

4) “AI layoffs” became a narrative before they became a reality.

2025 had a wave of restructuring that got branded as “AI transformation.” But the numbers don’t support the idea that AI capability is the main driver of job cuts. In many cases, “AI” is serving as a cover story for margin repair, post-COVID corrections, and reallocation of budgets toward infrastructure and compute.

In other words: AI isn’t replacing humans at scale yet. It’s replacing honesty about why layoffs happen. That distinction matters if you’re trying to anticipate labor impacts, policy responses, and where the next backlash is coming from.

5) Governance stopped being theoretical. Institutions are feeling the strain.

This year, AI moved into places where “move fast and break things” doesn’t work: courts, regulators, public authority, elections, copyright, and national strategy.

And what’s emerging is not global alignment, but fragmentation.

Europe is building enforcement capacity and trying to make compliance real. The US is making positioning moves that often look more like power grabs than coherent governance. China is treating AI as a control problem, not a market problem. The UK is still stuck in the awkward middle, sounding pro-innovation but offering limited legal certainty.

So if you’re operating across markets, the big risk isn’t just model risk. It’s governance divergence. Different rules, different liabilities, different cultural tolerances for AI harm.

That’s the spine of AI Wrapped 2025.

It’s not meant to reassure you. It’s meant to give you a map. A structural one.

If you only skim one thing, skim the sections on infrastructure bottlenecks, the implementation gap, and the agent turn. They explain why 2026 is going to feel less like “new tools” and more like “new constraints.”

And if you read the full deck, I want you to read it the way I built it: not as a hype reel, but as a reality check for people who actually have to make decisions.

Thanks for spending time with it.

The infrastructure bottleneck is the part that feels most underappreciated right now. Everyone's debating model capabilities while access to energy and compute is quietly becoming the real moat. I've seen this play out in smaller teams trying to scale custom implementations: they hit capacity limits before they hit model limits. What's interesting is that this flips the usual SaaS playbook, where distribution used to beat raw resources. Now the winners might just be whoever locked in power contracts early. The governance fragmentation piece also compounds this, since regulatory complexity adds another layer of operational overhead that only well-capitalized players can absorb.