AI and the New Class Lines

Why Personal Assistants Are Creating a New Class System

“Soon everyone will have their own AI.” We hear it constantly, usually said with a kind of moral satisfaction. But when I look at the organisations I train, or even small everyday interactions in the workplace, the gap is obvious. In the same meeting, I see one person using an integrated agent that handles their inbox, calendar, and research… and another person quietly typing into a free chatbot on a second screen.

Technically, both are using AI.

Practically, they are living in different cognitive worlds.

This gap is the story we are not telling enough. The promise that “everyone will have an AI assistant” was supposed to be the moment intelligence became a public utility. But late 2025 tells a different story: personal AI is not entering society as a universal equalizer. It is arriving as a stratifying force: Unlike the broadband divide, which was binary (online vs. offline), the AI divide is gradient. It is about the fidelity of the intelligence you access—the quality, integration, and the degree of amplification people receive.

In earlier TechLetter editions I focused on data and governance gaps and why delayed regulation benefits a small group at the expense of the many. This time the core question is different. We need to look at personal AI as a structure that actively produces new inequalities. More importantly, we need to ask how the same technology could close those gaps if we reshaped ethics and governance around equitable access.

This edition looks at personal AI as an inequality-producing structure across three mechanisms: economic inequality, cultural and linguistic asymmetry, and cognitive and emotional inequality.

1. Economic Axis

The early optimism rested on a simple promise: AI would raise the floor. MIT, Wharton, and Stanford experiments found that LLM assistance improved lower-skilled workers’ performance more than high-skilled workers. Productivity boosts of 12–35% were quoted as proof AI would narrow gaps.

But these were controlled experiments, artificially constrained environments with narrow tasks, equal access, and minimal noise. In controlled settings, AI acts as a leveler because everyone has the same tool and the same tasks. In the real world, differential access, varying task complexity, and unequal ability to evaluate AI outputs reverse this dynamic. By 2025, the dataset changed, real-world data from enterprise productivity tracking and management consultancy analyses show a very different pattern:

Top performers accelerate the most.

They gain approximately 30–45% productivity improvements because they can judge outputs, correct errors quickly, and strategically integrate model suggestions.Median workers gain little.

Gains flatten once routine tasks are automated.Lower performers sometimes fall further behind.

Over-reliance on AI introduces subtle errors, hallucinated assumptions, and miscalibrated decisions—reducing performance in complex tasks.

In other words: AI does not raise the floor. AI raises the ceiling.

Early macro-economic evidence points to a risk. The UNDP (2025) report emphasises that without deliberate policy and redistribution the benefits of AI risk being uneven. While some evidence suggests AI reduces wage gaps within occupations, the broader effects across different skill levels remain uncertain.

Capital concentration and market structure

Frontier AI models require enormous capital expenditure:

This creates strong returns to scale. Training a frontier model costs hundreds of millions of dollars. But once trained, the marginal cost of serving additional users drops dramatically. This cost structure naturally concentrates market power. The consequences are predictable:

Winner-take-most dynamics: A handful of companies control the most capable models

Infrastructure monopolies: Cloud compute, advanced chips, and data pipelines consolidate around Microsoft-OpenAI, Google, Amazon, and a few others

Barriers to entry rise: New entrants cannot compete without billions in capital and access to scarce GPU supply

These dynamics naturally favours incumbents, not because anyone intends it, but because only firms already operating at massive scale can absorb these upfront costs.

These structural shifts filter directly into the labour market:

High-skilled labor: Augmented by AI → productivity rises → wages increase

Low-skilled labor: Displaced by automation → moves to lower-wage service sectors

Mid-skilled labor: Squeezed from both sides → loses pricing power and wage premiums

Global modelling from the WTO adds a further dimension. In its long-term simulations, AI-enabled digitalisation could increase global trade by 34–40% by 2040. But the distribution of benefits is uneven.

If current digital infrastructure gaps persist:

High-income economies may see income gains of around 14%

Low-income economies may gain around 8%

This divergence is driven by disparities in compute access, data infrastructure, and firms’ capacity to integrate AI into production. AI accelerates growth — but accelerates it faster for those already positioned to benefit.

Subscription and access economics

Personal AI itself carries an economic barrier. Effective AI use is increasingly tied to ongoing subscription-based augmentation:

LLM Pro accounts: $20–$40/month

Emerging personal AI agents (2025–2026): $80–$150/month

AI-optimized hardware: $500–$3,000 upfront

Personal AI is becoming a positional good — a technology whose value increases when fewer people have deep, continuous access to it. The threshold for high-quality usage isn’t just a subscription fee; it includes device capability, bandwidth, and the literacy to orchestrate multiple tools together.

This produces a compound access divide:

Who can afford continuous augmentation?

Who works in organisations offering enterprise-grade AI?

This mirrors earlier infrastructural inequalities — broadband, smartphones, quality education — but with a critical difference: personal AI directly mediates productivity. Unlike Netflix or Spotify, the quality of your AI determines the quality of your output. And in knowledge economies, that becomes economic advantage almost instantly.

2. Cultural and Linguistic Axis

What we often call “cultural inequality” in AI is really a quiet battle over meaning: who gets to shape it, who gets to broadcast it, and who gets to have their interpretation recognized as legitimate. AI inserts itself into this space not as a neutral tool, but as a new cultural gatekeeper — one whose defaults overwhelmingly reflect the perspectives of a narrow slice of the world.

When Pierre Bourdieu coined cultural capital, he meant the subtle ways taste, language, and cultural fluency reproduce class. Digital sociologists describe digital capital as a mix of skills, access, and technological advantage, the ability to turn digital tools into social value. In 2025, we’re watching a new extension: AI capital: the scripts, workflows, styles, and tacit knowledge that shape how individuals perform competence in an AI-saturated world.

Research from digital sociology labs shows a stark divide: communities with high digital cultural capital use AI expressively—producing long-form content, shaping identity. Those with lower digital capital use AI functionally—translation, summarization, task completion. Some people use AI to express culture; others merely to access it.

Models reward users who intuitively understand their cultural grammar, the tone, phrasing, and taste markers that optimize results. This is why we could describe AI assistants as positional goods: their value lies not in the tool itself, but in the social meaning of deploying it effectively. Even aesthetics matter: Midjourney’s glossy frames become symbolic currencies conforming to global prestige norms, while indigenous aesthetics get flattened into algorithmic “exoticism.”

AI as a New Gatekeeper of Meaning

Cultural inequality isn’t just about tools; it’s about who gets to define what culture means. Three trends are driving this:

(a) The Algorithmic Canon: LLMs disproportionately amplify narratives from dominant cultural centers—US, UK, Western tech hubs. When everyone writes emails in the same ChatGPT-polished tone, we’re watching a new cultural standard emerge, authored by a handful of companies in San Francisco and London.

(b) Personal AI as Narrative Companion: People who can feed AIs with their tastes and cultural fluency get richer outputs—deeper narrative agency. Others receive generic templates that flatten identity rather than expand it.

(c) The Platform Aesthetic: As cultural production flows through model-generated outputs, we’re drifting toward convergence—AI’s “house style” becomes the default. This is not democratization; it is homogenization. Those who know how to push models off their defaults gain symbolic advantage.

When Language Itself Becomes a Filter for AI Advantage

One of the quietest but most structurally important drivers is language. Researches show over 45% of pre-training corpora originate from English-dominant datasets. Meanwhile, Turkish, Swahili, Amharic, Bengali, Lao, and Indigenous languages appear in trace amounts—the “long tail of linguistic poverty.”

This shapes everything downstream. English-speaking users interact with the version for whom the system was built: richer completions, subtler reasoning, fewer hallucinations, deeper semantic memory. Non-English users get thinner, noisier, culturally mismatched outputs. A 2025 UNDP–UNESCO note: “The quality gap between English and non-English outputs is becoming a new literacy divide.”

Even high-resource languages degrade if phrasing falls outside the “idealized English cognitive frame” embedded in training. You don’t only suffer for speaking Turkish—you suffer for speaking English differently from how Silicon Valley writes it.

As I’ve explored in an earlier TechLetter piece, when systems have sparse linguistic grounding, they start guessing—filling gaps with stereotypes, over-generalizations, cultural flattening. For communities at digital infrastructure margins, this creates a double bind: they lack resources to build their own models, yet available models systematically misrepresent their realities. Linguistic scarcity turns directly into epistemic risk. When a model “hallucinates” culture, it effectively rewrites history for the user who lacks the knowledge to correct it.

Ironically, AI was meant to democratize communication. Instead, the absence of investment in linguistic diversity turns English into an even more entrenched form of global capital. Language itself becomes an access gate to AI’s cognitive benefits.

3. Cognitive and Emotional Axis

If the economic axis is about productivity and the cultural axis is about visibility, the cognitive-emotional axis is about something even more intimate: how much mental load AI can actually remove from your daily life—and for whom.

The crucial mechanism isn’t access alone—it’s the ability to evaluate. The Economist calls this the “judgment premium”: the cognitive capacity to filter, critique, and strategically integrate AI outputs. Those with domain knowledge and contextual intelligence can judge what models produce, catch errors quickly, and use AI as genuine augmentation. Those without this baseline spend cognitive effort verifying, second-guessing, and managing the tool itself.

Several studies indicate that workers with stronger baseline expertise and domain context may experience reduced cognitive burden when using AI tools . Conversely, broader survey data across age and education levels show that younger or less experienced users exhibit lower critical-thinking scores and higher dependence on AI.

“The ability to supervise AI” becomes a differentiating skill. AI doesn’t automate cognition; it reallocates cognitive responsibility upward. Deloitte’s 2024 survey reports that while many workers use AI tools, a large share still say they ‘have to spend time confirming whether the task is done properly or the information is correct’. During interviews, early-career workers noted AI sets higher expectations for performance, yet many lacked confidence in how to use it.

In my trainings, the pattern is almost predictable now. Workers don’t describe AI as augmentation. They describe it as another inbox something to check, correct, triage, supervise. But those with sufficient expertise, institutional support, and cognitive bandwidth experience it differently: for them, AI genuinely reduces load while amplifying output. The promise of cognitive ease often transforms into cognitive oversight.

And there is a sociological dimension here: AI-induced cognitive anxiety is not evenly distributed. This widens a cognitive-emotional gap that mirrors economic divides, creating a form of digital Darwinism: a world where the market rewards not just talent, but the sheer velocity of adaptation permitted by one’s institutional environment.

4. Governance & Mitigations:

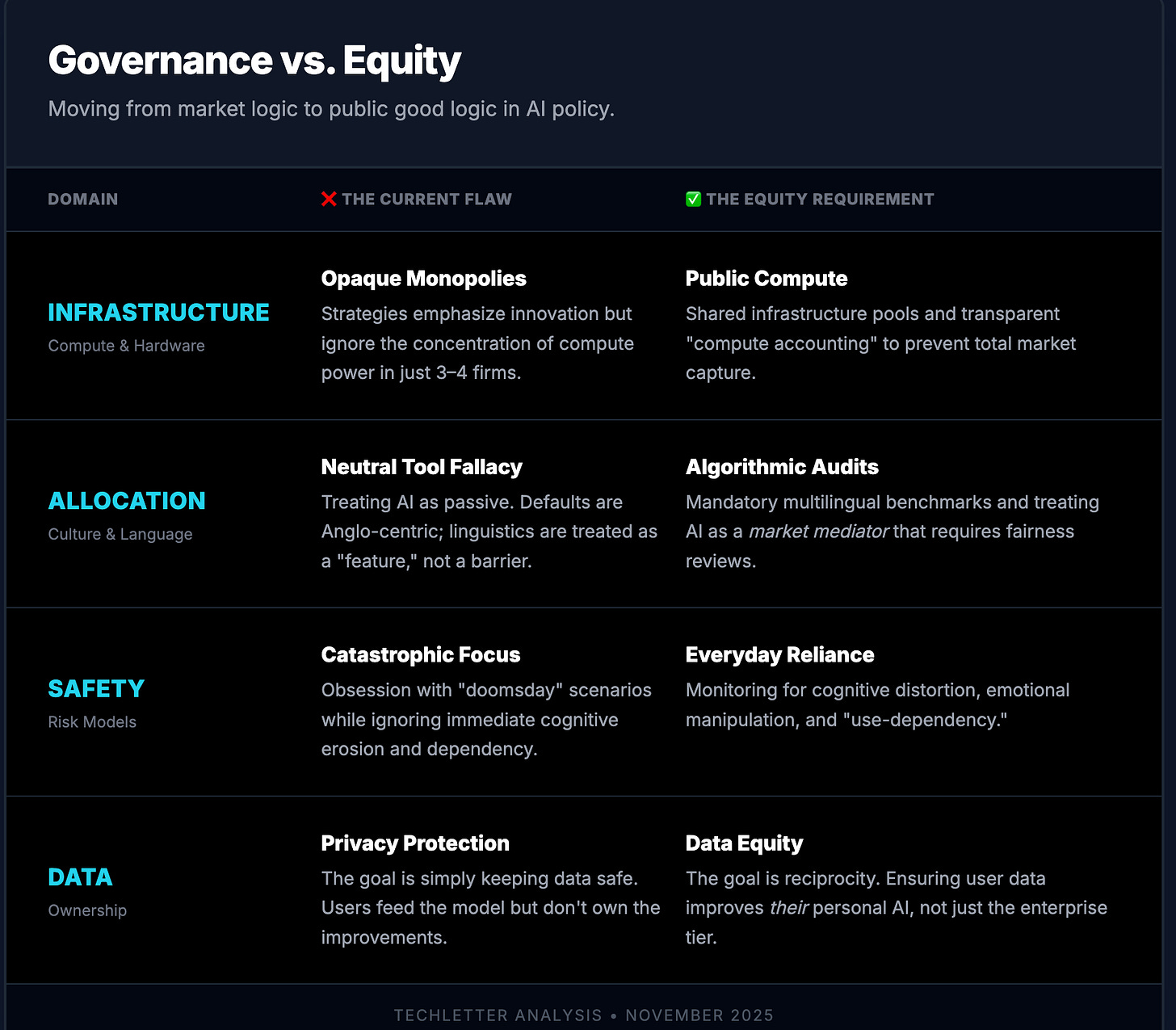

These three axes—economic, cultural, and cognitive—are interconnected. Economic barriers limit access to capable models. Cultural and linguistic asymmetries determine who can use these models effectively. Cognitive inequality determines who benefits versus who merely supervises. Addressing these requires governance interventions across four critical domains: infrastructure, allocation, safety, and data.

On infrastructure, the current model treats compute power as a scarce commodity controlled by a few firms. An equity-oriented alternative would mandate shared infrastructure pools with transparent ‘compute accounting’ to prevent market capture.

On allocation, current AI development ignores the ‘neutral tool fallacy’, treating Anglo-centric defaults as features rather than barriers. Equity requires mandatory multilingual benchmarks and fairness audits.

On safety, governance currently fixates on catastrophic scenarios while neglecting everyday harms like cognitive erosion and dependency. Equity demands monitoring systems that track reliance patterns and emotional manipulation.

On data, the prevailing model keeps users as feeders of models without ownership of improvements. Equity would establish data reciprocity, ensuring users benefit from their contributions to model refinement.

If governance doesn’t rise to this level, the inequalities described above will not be glitches, they will be the operating system of the next decade.

The Real Question Behind “Everyone Will Have an AI Assistant”

By late 2025, “everyone will have their own AI assistant” functions more like a slogan than an empirical claim.

We know, from macroeconomic modeling, that AI will likely widen gaps between high-income and low-income countries unless infrastructure and policy are deliberately re-balanced. We know, from organization-level studies, that AI boosts those who already have complementary skills, authority, and digital capital. We know, from sociological work, that digital tools rarely arrive into a vacuum; they land on top of existing hierarchies and often deepen them.

So the real question is not whether everyone will technically be able to open a chatbot.

The question is: who will have an AI assistant that genuinely expands their agency, security, and voice, and who will be left with thin, unstable, or low-quality access that mostly serves to benchmark them against those with better tools?

If we keep treating personal AI as a lifestyle upgrade, we will drift towards a world where cognitive relief, strategic time, and symbolic polish are concentrated among a relatively small global class. If we treat it as contested infrastructure, with all the governance, equity, and accountability that implies, we get a chance—only a chance—to bend this technology toward something closer to a public good.

“Everyone will have an AI assistant” is not a destiny. It is a policy choice disguised as inevitability.